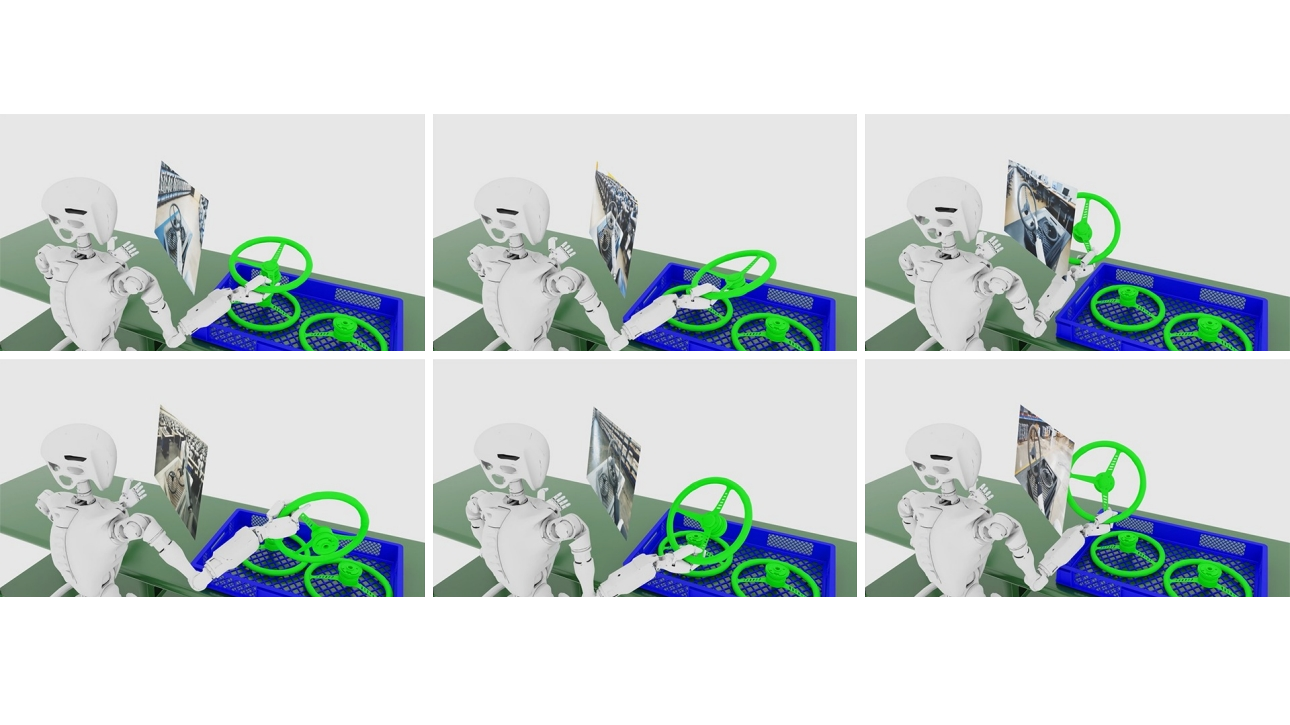

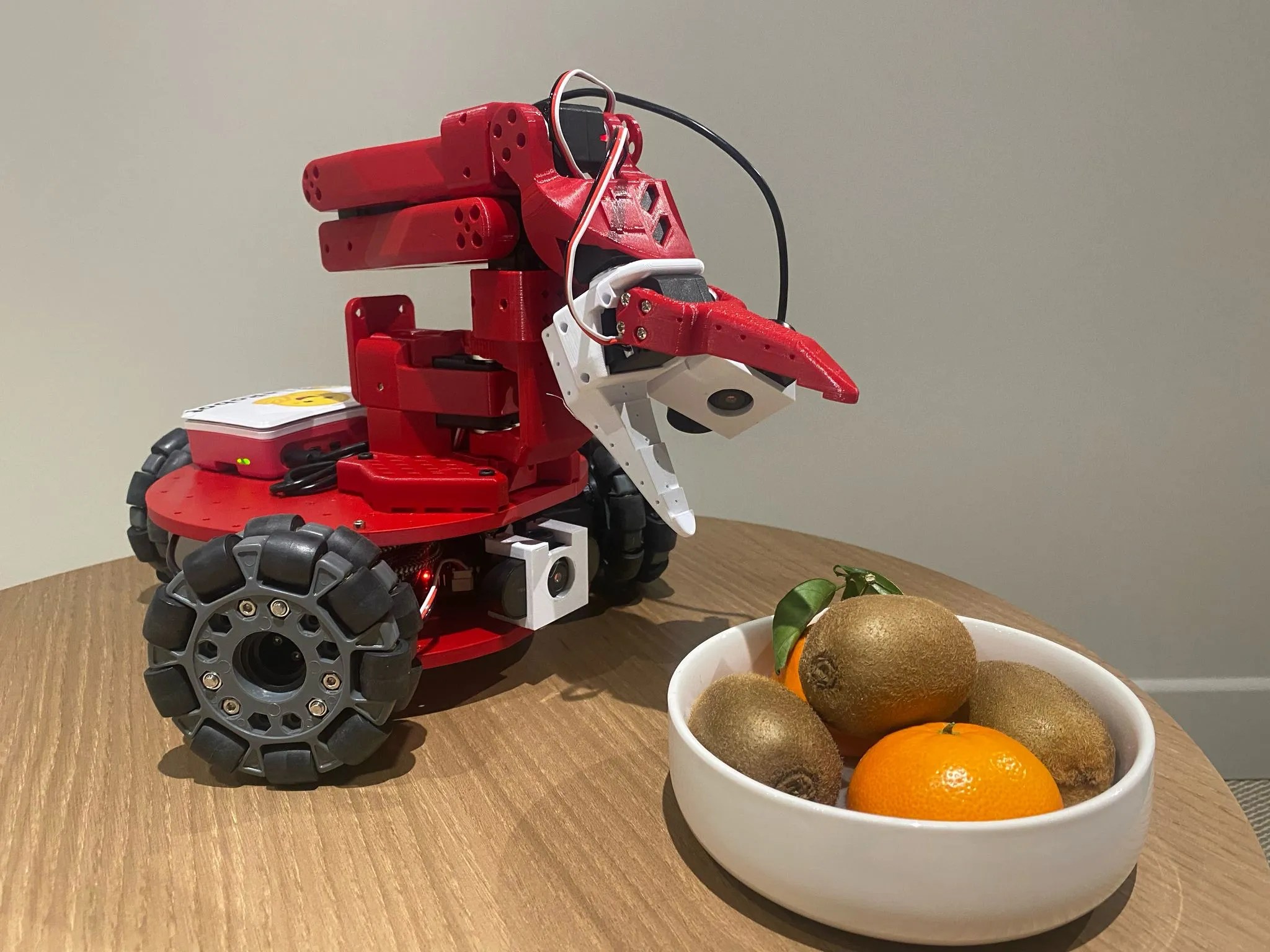

Cosmos builds on pre-trained world foundation models that use autoregressive and diffusion architectures, which developers can fine-tune. The models are trained on 9,000 trillion tokens from diverse sources, including synthetic environments and video data. Because developers can transform 3D-rendered scenes into photorealistic videos, Cosmos makes it possible to simulate complex scenarios, such as humanoid robots executing advanced tasks or autonomous driving models operating in realistic conditions. The resulting large, diverse datasets support faster and more robust AI training and development.