NVIDIA Jetson with Autodistill: Deploy Computer Vision with Efficient Auto-labeling Method

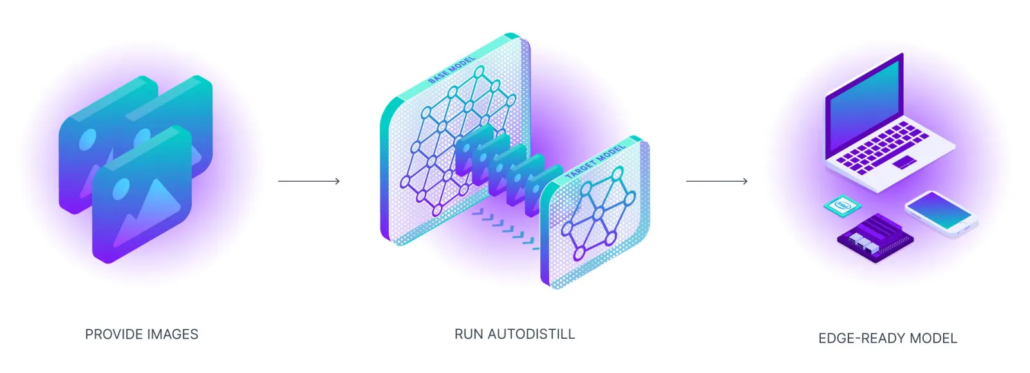

Before you can unleash the power of a Jetson edge device for AI inference and processing, there is one step that always demands a great deal of time and resources: preparing and labeling the dataset. Manually annotating image data can give accurate training data, but it is a slow process. Autodistill with Grounded-SAM and YOLOv8 can simplify this by automatically labeling training data for edge development scenarios.

With Roboflow’s Colab notebook as a guide, it’s quite easy to get started with annotation and build your own PyTorch model, ready for edge deployment.

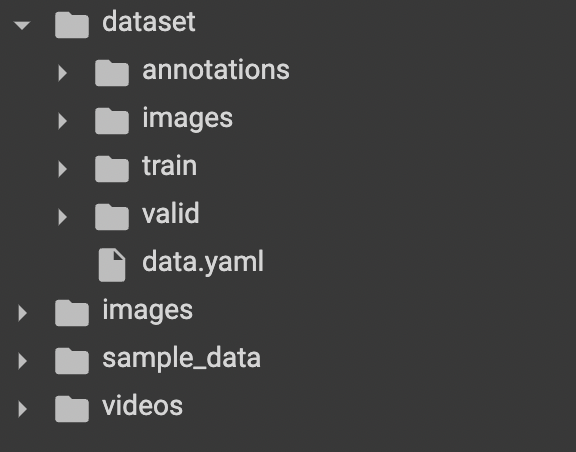

Dataset Preparation

After checking the GPU environment and installing all the necessary packages, we can create the images and videos directories to store the source videos and the images waiting to be annotated.
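A minimal sketch of that directory setup is shown here. The `HOME` variable and the folder names `videos` and `images` are illustrative assumptions; only the names `VIDEO_DIR_PATH` and `IMAGE_DIR_PATH` are taken from the code used later in this post.

```python
import os

# Assumption: everything lives under the current working directory.
HOME = os.getcwd()

# Folder names are illustrative; the variable names match the code later in this post.
VIDEO_DIR_PATH = os.path.join(HOME, "videos")
IMAGE_DIR_PATH = os.path.join(HOME, "images")

# exist_ok=True makes this safe to re-run in a notebook.
os.makedirs(VIDEO_DIR_PATH, exist_ok=True)
os.makedirs(IMAGE_DIR_PATH, exist_ok=True)
```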

In real-life applications, we usually want to analyze specific moments in a video stream rather than work through a huge volume of still images. For this annotation step, I prepared 10 videos. The first thing to do is convert these videos into images by saving every 10th frame from each one. I set aside the first two videos for testing, to evaluate the model, and used the other eight for training.

```python
import supervision as sv
from tqdm.notebook import tqdm

# VIDEO_DIR_PATH and IMAGE_DIR_PATH are the directories created in the previous step.
FRAME_STRIDE = 10  # save every 10th frame

video_paths = sv.list_files_with_extensions(
    directory=VIDEO_DIR_PATH,
    extensions=["mov", "mp4"])

# Hold out the first two videos for testing; the rest are used for training.
TEST_VIDEO_PATHS, TRAIN_VIDEO_PATHS = video_paths[:2], video_paths[2:]

for video_path in tqdm(TRAIN_VIDEO_PATHS):
    video_name = video_path.stem
    image_name_pattern = video_name + "-{:05d}.png"
    with sv.ImageSink(target_dir_path=IMAGE_DIR_PATH, image_name_pattern=image_name_pattern) as sink:
        for image in sv.get_video_frames_generator(source_path=str(video_path), stride=FRAME_STRIDE):
            sink.save_image(image=image)
```

Autolabel Image

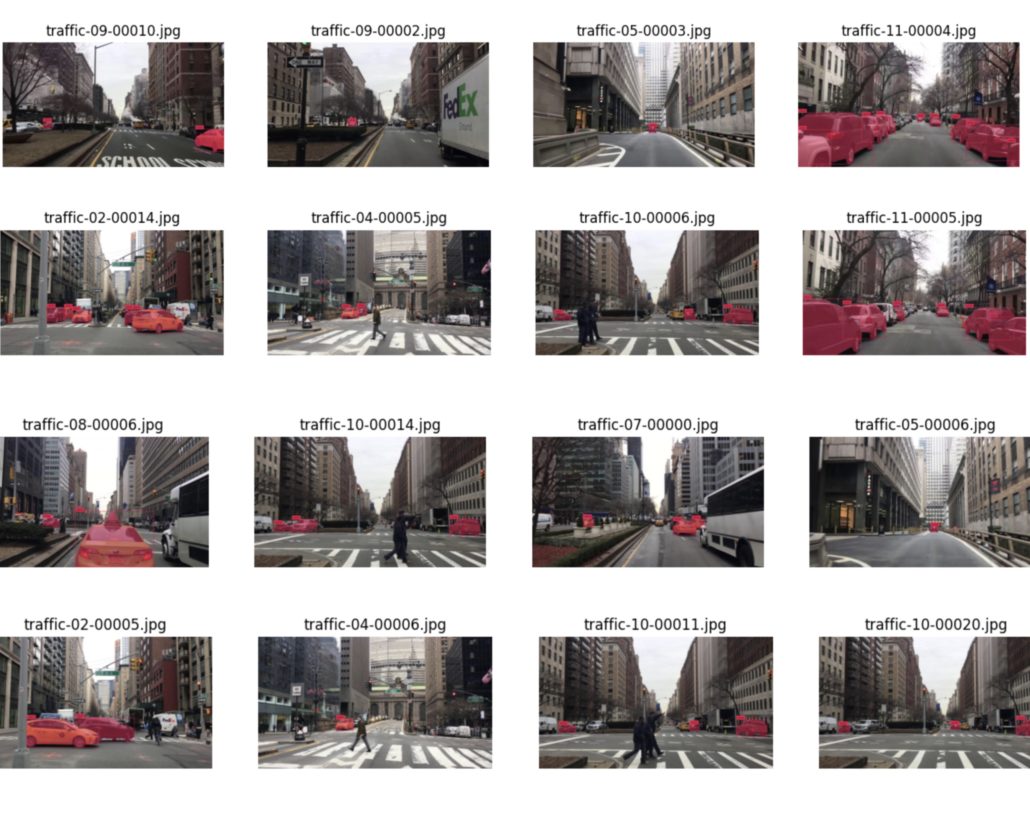

When defining the ontology for labeling, you need to work out how the Base Model is prompted, how that prompt maps onto your dataset, and which objects your target model will detect. I assumed the prompt names for objects in Roboflow’s original data library would be common words, so I used “car”, and it did indeed work.

```python
from autodistill.detection import CaptionOntology

# Maps the prompt sent to the base model ("car") to the class name saved in the dataset ("vehicle").
ontology = CaptionOntology({
    "car": "vehicle"
})
```

However, you may be unsure which prompt to use. With several options such as “trash”, “rubbish”, and “waste”, for example, it is hard to know which one will label your data best. In that case, check out the blog How to Evaluate Autodistill Prompts with CVevals and give it a try: compare the performance of similar prompts on your dataset and pick the best one.
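To make the idea of comparing prompts concrete, here is a small sketch that scores candidate prompts by F1 against a handful of hand-labeled images. It does not use the CVevals API; the `PromptResult` type, the `best_prompt` helper, and the counts in the example are all hypothetical and only illustrate the comparison.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class PromptResult:
    """Detection counts for one prompt on a small hand-labeled set (hypothetical)."""
    prompt: str
    true_positives: int
    false_positives: int
    false_negatives: int

    def f1(self) -> float:
        # F1 = 2TP / (2TP + FP + FN); treated as 0 when there is nothing to count.
        denom = 2 * self.true_positives + self.false_positives + self.false_negatives
        return 2 * self.true_positives / denom if denom else 0.0


def best_prompt(results: Sequence[PromptResult]) -> PromptResult:
    """Return the result with the highest F1 score."""
    if not results:
        raise ValueError("no prompt results to compare")
    return max(results, key=lambda r: r.f1())


# Made-up counts, only to show the comparison.
candidates = [
    PromptResult("trash", true_positives=40, false_positives=10, false_negatives=10),
    PromptResult("rubbish", true_positives=30, false_positives=5, false_negatives=20),
    PromptResult("waste", true_positives=20, false_positives=20, false_negatives=30),
]
winner = best_prompt(candidates)
print(winner.prompt, round(winner.f1(), 3))
```

F1 is used here because it penalizes both missed objects and spurious boxes; a different metric could be substituted if one kind of error matters more for your dataset.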

After running the labeling process, we get sample output showing the labels drawn on the sample images.
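For reference, the labeling run itself might look roughly like the sketch below. It assumes the `autodistill`, `autodistill-grounded-sam`, and `autodistill-yolov8` packages are installed. The `autolabel_and_train` wrapper, the `dataset_dir` argument, the `yolov8n.pt` checkpoint, and the epoch count are illustrative choices and are not taken from this post.

```python
import os


def autolabel_and_train(image_dir: str, dataset_dir: str, epochs: int = 50) -> None:
    """Label images with Grounded-SAM, then train a YOLOv8 target model (sketch)."""
    # Imported lazily because these packages are heavy and expect a GPU.
    from autodistill.detection import CaptionOntology
    from autodistill_grounded_sam import GroundedSAM
    from autodistill_yolov8 import YOLOv8

    # Prompt given to the base model -> class name written to the dataset.
    ontology = CaptionOntology({"car": "vehicle"})

    # The base model labels every .png in image_dir and writes the dataset to dataset_dir.
    base_model = GroundedSAM(ontology=ontology)
    base_model.label(input_folder=image_dir, extension=".png", output_folder=dataset_dir)

    # Distill the labels into a small target model suited to edge devices.
    # Assumption: the labeled dataset contains a data.yaml file at its root.
    target_model = YOLOv8("yolov8n.pt")
    target_model.train(os.path.join(dataset_dir, "data.yaml"), epochs=epochs)
```

Calling `autolabel_and_train(IMAGE_DIR_PATH, "dataset")` would label the extracted frames and start training; the `"dataset"` output folder name is a placeholder.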

Benefits and Limitations

Autodistill provides significant benefits for your computer vision applications:

- Cost efficiency and speed

- Consistency: reducing the potential for human errors or inconsistencies that can occur during manual labeling

- Reduction of Human Bias

However, it’s important to note that auto-labeling also comes with some limitations and considerations:

- Accuracy: auto-labeling might not be as accurate as manual labeling, especially where complex or subtle visual features need to be annotated. Combining it with human review and label refinement can be a much more efficient choice

- Model Dependence: this method relies on pre-trained models, making its effectiveness dependent on the quality and relevance of those models

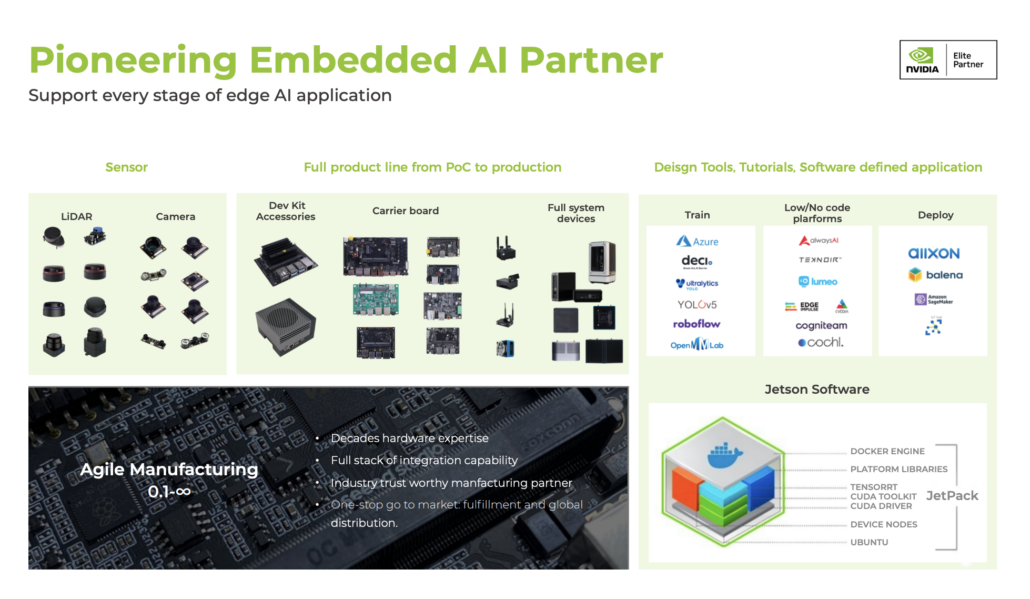

Deploy Model on NVIDIA Jetson Platform

Decide how to use Autodistill based on your specific scenario. Once you have the PyTorch model, remember to check out our wiki, which shows how to deploy it on the NVIDIA Jetson platform and even how to improve inference performance using TensorRT. Check it out!
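As a rough illustration of the TensorRT step, the sketch below uses the `ultralytics` package to export trained YOLOv8 weights to a TensorRT engine. This is an assumption about tooling and not necessarily the procedure described in the wiki; the `export_to_tensorrt` helper and the weights path in the note that follows are hypothetical.

```python
def export_to_tensorrt(weights_path: str, half: bool = True) -> None:
    """Export YOLOv8 PyTorch weights to a TensorRT engine (sketch)."""
    # Imported lazily: needs ultralytics and a TensorRT installation on the device.
    from ultralytics import YOLO

    model = YOLO(weights_path)
    # format="engine" selects TensorRT, half=True requests FP16, device=0 is the first GPU.
    # TensorRT engines are tied to the GPU and TensorRT version, so run this on the Jetson itself.
    model.export(format="engine", half=half, device=0)
```

On the Jetson, a call such as `export_to_tensorrt("runs/detect/train/weights/best.pt")` would build the engine, which can then be loaded with `YOLO(...)` in place of the `.pt` file for inference.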

Seeed NVIDIA Jetson Ecosystem

Seeed is an Elite partner for edge AI in the NVIDIA Partner Network. Explore more carrier boards, full system devices, customization services, use cases, and developer tools on Seeed’s NVIDIA Jetson ecosystem page.

Join the forefront of AI innovation with us! Harness the power of cutting-edge hardware and technology to revolutionize the deployment of machine learning in the real world across industries. Be a part of our mission to provide developers and enterprises with the best ML solutions available. Check out our successful case study catalog to discover more edge AI possibilities!

Take the first step and send us an email at [email protected] to become a part of this exciting journey!

Download our latest Jetson Catalog to find one option that suits you well. If you can’t find the off-the-shelf Jetson hardware solution for your needs, please check out our customization services, and submit a new product inquiry to us at [email protected] for evaluation.