What Is MiniGPT-4? A Deep Dive and Deployment on Jetson Orin

Complex computer vision tasks often require machines not only to interpret visual data but also to understand its context through language. That is the strength of multimodal vision-language models: they improve the accuracy and depth of object detection and open up huge potential for more intuitive human-machine interaction. MiniGPT-4 is one interesting application through which we can dive into the world of multimodal LLMs.

Here we will talk about how the MiniGPT-4 technology behind these generative AI applications is built, where it can be deployed, and how to get the most out of it on NVIDIA Jetson Orin. To explore more of what generative AI can do, feel free to follow the Jetson Generative AI Lab guides and give it a try!

What Is MiniGPT-4?

MiniGPT-4 is a lightweight vision-language model that offers ChatGPT-style conversation about images. It was developed to test whether an advanced large language model can strengthen multimodal generation capabilities (we will come back to how the model is trained in the sections below).

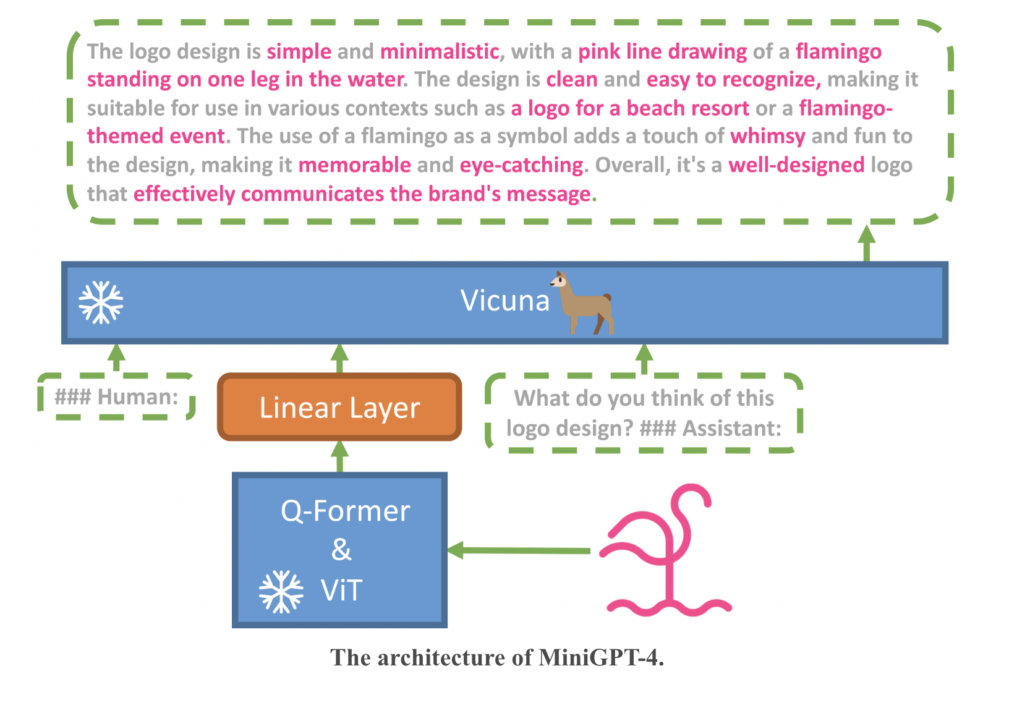

MiniGPT-4 aligns a frozen visual encoder (a pre-trained ViT plus a Q-Former) with a frozen LLM, Vicuna, using only a single projection layer. With this setup it demonstrates many advanced multimodal capabilities similar to those of GPT-4, such as generating detailed image descriptions and creating websites from hand-drawn sketches, and it can even write poems or give guidance based on a given image.
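To get a feel for how small the trainable part of this design is, here is a rough back-of-the-envelope count of the parameters in that single projection layer. The two dimensions are assumptions taken from commonly published model configurations (a 768-wide Q-Former output and a 5120-wide hidden state for a 13B Vicuna), not figures from this article, so treat the result as an estimate.

```shell
#!/usr/bin/env bash
# Assumed sizes (not stated in this article): the Q-Former output width and
# the hidden size of a 13B Vicuna/LLaMA model.
qformer_dim=768
llm_dim=5120

# A linear layer has in*out weights plus one bias per output unit.
weights=$((qformer_dim * llm_dim))
biases=$llm_dim
trainable=$((weights + biases))

echo "trainable parameters in the projection layer: $trainable"
```

Under these assumed sizes the layer comes to roughly 3.9 million parameters, a tiny fraction of the 13 billion in the frozen LLM, which is why freezing everything else keeps training cheap.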

To make the model's output sound more natural, it is important to reduce noise in the training data: the model is fine-tuned on a dataset of detailed image descriptions rather than on short image captions alone. This improves both the reliability of what the model generates and its overall usability.

MiniGPT-4's Two-Stage Training Method

1. Pre-training: align vision and language on a large collection of image-text pairs

- Training runs for 20,000 steps with a batch size of 256 over around 5 million image-text pairs, and takes about 10 hours to complete.

- After this initial stage, the model already holds rich knowledge and responds reasonably to human queries. However, its outputs are not guaranteed to match human intent accurately.

2. Vision-language alignment: fix description errors in data post-processing, then fine-tune the model

- Use ChatGPT to remove repeated or unnecessary sentences from the descriptions generated for 5,000 randomly chosen images.

- Manually verify the correctness of each image description. In the end, 3,500 of the images yield high-quality image-text pairs that serve as input for the fine-tuning step.

- Besides identifying objects in images, as the BLIP-2 vision-language model does, MiniGPT-4 also shows an ability to understand images and retrieve related information.
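The figures quoted for the two stages can be sanity-checked with a little shell arithmetic. This is only a sketch using the rounded numbers from the list above ("around 5 million" pairs in particular), so the percentages are approximate.

```shell
#!/usr/bin/env bash
# Stage 1 figures quoted above.
steps=20000
batch_size=256
dataset_pairs=5000000   # "around 5 million", so results are approximate

samples_seen=$((steps * batch_size))
# Integer percentage of the dataset size covered by those samples.
coverage_pct=$((samples_seen * 100 / dataset_pairs))

# Stage 2 figures quoted above.
candidates=5000
kept=3500
kept_pct=$((kept * 100 / candidates))

echo "stage 1: $samples_seen samples seen (~${coverage_pct}% of one pass over the data)"
echo "stage 2: $kept of $candidates descriptions kept (${kept_pct}%)"
```

In other words, stage 1 amounts to roughly one pass over the pre-training data, and the manual check in stage 2 keeps about 70% of the candidate descriptions.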

Deploying MiniGPT-4 on Jetson Is Easy and Smooth!

If you want to build your own secure, local inference server that does not depend on a network connection, deploying MiniGPT-4 on NVIDIA Jetson AGX Orin is a good choice. If you have already explored the Jetson Generative AI Lab, you may already know the basic setup workflow for MiniGPT-4. You can run it on Jetson by following these steps:

1. Get a Jetson AGX Orin edge device and flash the system by following this wiki.

2. Run the following commands in a terminal to install the required packages and launch MiniGPT-4:

    git clone https://github.com/dusty-nv/jetson-containers
    cd jetson-containers
    sudo apt update; sudo apt install -y python3-pip
    pip3 install -r requirements.txt
    ./run.sh $(./autotag minigpt4) /bin/bash -c 'cd /opt/minigpt4.cpp/minigpt4 && python3 webui.py \
      $(huggingface-downloader --type=dataset maknee/minigpt4-13b-ggml/minigpt4-13B-f16.bin) \
      $(huggingface-downloader --type=dataset maknee/ggml-vicuna-v0-quantized/ggml-vicuna-13B-v0-q5_k.bin)'

3. Open a browser on a machine on the same network and go to http://<Jetson_Device_IP>:7860
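If the page does not load right away, the container may still be downloading or loading the models. A small helper like the one below can poll the web UI from another machine until it answers. This is a minimal sketch: `webui_url` and `wait_for_webui` are hypothetical helper names, not part of jetson-containers, and it assumes `curl` is installed and that the UI listens on port 7860 as in step 3.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking the MiniGPT-4 web UI; not part of
# jetson-containers.

# Build the web UI address from the Jetson's IP (port defaults to 7860).
webui_url() {
  local host="$1" port="${2:-7860}"
  printf 'http://%s:%s\n' "$host" "$port"
}

# Poll the URL until it answers or the retry budget runs out.
# Arguments: URL, number of attempts (default 30), seconds between attempts (default 10).
wait_for_webui() {
  local url="$1" retries="${2:-30}" delay="${3:-10}"
  local i
  for ((i = 1; i <= retries; i++)); do
    if curl --silent --fail --max-time 5 --output /dev/null "$url"; then
      echo "web UI is up at $url"
      return 0
    fi
    sleep "$delay"
  done
  echo "web UI did not answer at $url" >&2
  return 1
}
```

For example, `wait_for_webui "$(webui_url 192.168.1.50)"` would check a Jetson at the placeholder address 192.168.1.50; substitute your own device IP. The default retry budget is an arbitrary choice and may need to be raised on a slow connection.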

Enjoy your personal AI Chatbot!

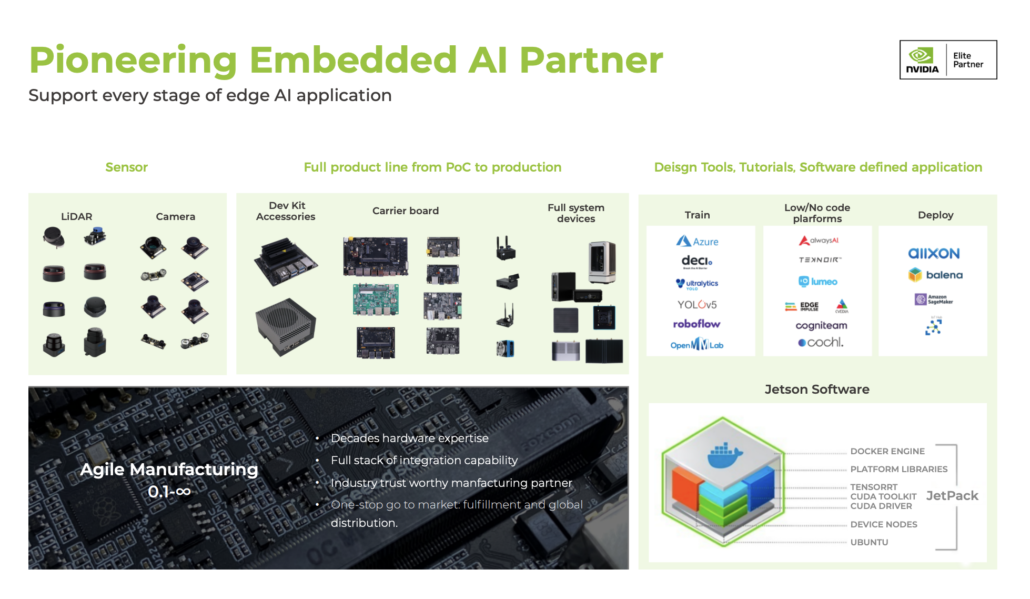

Seeed: NVIDIA Jetson Ecosystem Partner

Seeed is an Elite partner for edge AI in the NVIDIA Partner Network. Explore more carrier boards, full system devices, customization services, use cases, and developer tools on Seeed’s NVIDIA Jetson ecosystem page.

Join the forefront of AI innovation with us! Harness the power of cutting-edge hardware and technology to revolutionize the deployment of machine learning in the real world across industries. Be a part of our mission to provide developers and enterprises with the best ML solutions available. Check out our successful case study catalog to discover more edge AI possibilities!

Take the first step and send us an email at [email protected] to become a part of this exciting journey!

Download our latest Jetson Catalog to find one option that suits you well. If you can’t find the off-the-shelf Jetson hardware solution for your needs, please check out our customization services, and submit a new product inquiry to us at [email protected] for evaluation.