Reachy Mini: An Open Journey Built Together with Hugging Face, Pollen Robotics, & Seeed Studio

Today at CES 2026, NVIDIA unveiled a range of new open models to power the next generation of agents, both online and in the real world.


From the recently released NVIDIA Nemotron reasoning LLMs to the new NVIDIA Isaac GR00T N1.6 open reasoning VLA and the NVIDIA Cosmos world foundation models, the building blocks AI builders need to create their own agents are available today.

In today's CES keynote, Jensen Huang demonstrated exactly that: using the processing power of NVIDIA DGX Spark with Reachy Mini, an open-source desktop robot by Hugging Face and Pollen Robotics, to create a personal office R2-D2 that you can talk to and collaborate with on projects.

Last month marked a significant milestone. Together with Hugging Face, Pollen Robotics, & Seeed Studio, we shipped 3,000 units of Reachy Mini. Within five months, what began as an open-source idea became a real robot on desks across the globe, a small but important step toward making robotics more open, collaborative, and accessible to everyone.

Meet Reachy Mini on the CES Stage

Reachy Mini is Hugging Face’s first open-source desktop robot, created for learning, creativity, and exploring human-robot interaction. Designed to be expressive, programmable, and approachable, it invites people to experiment with AI and robotics directly from their desks.

With fully open-source hardware, software, and simulation environments, Reachy Mini is more than a product: it's a platform that encourages curiosity, hands-on learning, and community-driven innovation.

Whether you’re an AI developer, researcher, educator, robotics enthusiast, or simply coding with your kids on the weekend, Reachy Mini makes it possible to develop, test, deploy, and share real-world AI applications using the latest open models and tools.

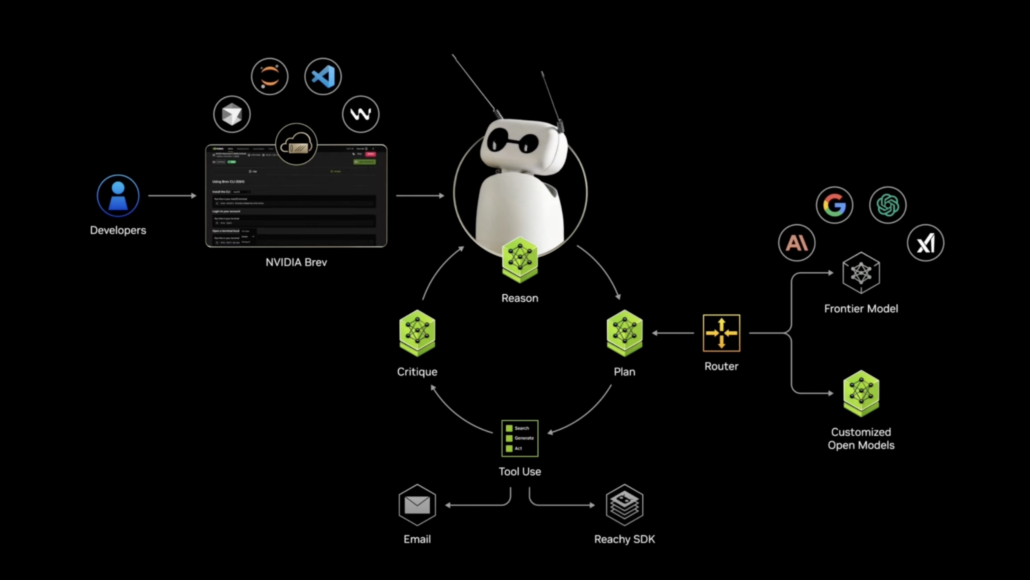

During the keynote demo, Reachy Mini served as the physical avatar for an AI agent running on DGX Spark:

- The Technical Setup: Running on a DGX Spark personal cloud, the system used an intent-based model router that decides in real time whether to process a task locally for privacy or route it to a larger remote model for complex reasoning.

- Tool Calls & Control: The AI agent used tool calls to control Reachy's head, ears, and camera, and integrated the ElevenLabs API to give it a natural voice.

- Multimodal Capabilities: In the demo, Reachy didn’t just chat. It managed a grocery list (eggs, milk, butter), executed code to send script updates, and even turned a hand-drawn sketch into a full architectural rendering.

- Home Interaction: Most impressively, Reachy demonstrated real-time home monitoring.
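The demo's internals were not published, so the following is only a minimal Python sketch of the two ideas above: an intent-based router that keeps private or lightweight requests local and sends heavy ones to a remote model, plus a dispatcher that executes tool calls. Everything here is an assumption for illustration: the keyword classifier stands in for a learned intent model, and the intent labels and tool names (`move_head`, `move_ears`, `capture_image`) are hypothetical. It is not NVIDIA's or Pollen Robotics' implementation, and it does not call the Reachy Mini SDK or ElevenLabs.

```python
import re
from dataclasses import dataclass
from typing import Any, Callable, Dict

# --- Intent-based routing (hypothetical intents and keywords) ---
REMOTE_INTENTS = {"image_generation", "complex_reasoning"}

IMAGE_WORDS = {"sketch", "render", "rendering", "draw", "drawing"}
ROBOT_WORDS = {"look", "turn", "head", "ears", "camera"}
PERSONAL_WORDS = {"grocery", "groceries", "calendar", "reminder"}


@dataclass
class Route:
    intent: str
    target: str  # "local" (on-device, private) or "remote" (large hosted model)


def classify_intent(text: str) -> str:
    """Toy keyword classifier standing in for a learned intent model."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    # Checked first so "turn this sketch into..." is not mistaken for robot control.
    if words & IMAGE_WORDS:
        return "image_generation"
    if words & ROBOT_WORDS:
        return "robot_control"
    if words & PERSONAL_WORDS:
        return "personal_data"
    if len(text.split()) > 25:
        # Long, open-ended requests are treated as needing deeper reasoning.
        return "complex_reasoning"
    return "small_talk"


def route(text: str) -> Route:
    """Keep private or lightweight intents local; send heavy ones to a remote model."""
    intent = classify_intent(text)
    target = "remote" if intent in REMOTE_INTENTS else "local"
    return Route(intent, target)


# --- Tool calls (hypothetical robot tools; they only return status strings) ---
def move_head(yaw_deg: float, pitch_deg: float) -> str:
    return f"head -> yaw={yaw_deg}, pitch={pitch_deg}"


def move_ears(angle_deg: float) -> str:
    return f"ears -> angle={angle_deg}"


def capture_image() -> str:
    return "camera -> frame captured"


TOOLS: Dict[str, Callable[..., str]] = {
    "move_head": move_head,
    "move_ears": move_ears,
    "capture_image": capture_image,
}


def dispatch(tool_call: Dict[str, Any]) -> str:
    """Execute one tool call of the form {"name": ..., "arguments": {...}}."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**tool_call.get("arguments", {}))


if __name__ == "__main__":
    for prompt in [
        "Turn your head to the left",
        "Add eggs, milk, and butter to my grocery list",
        "Turn this sketch into an architectural rendering",
    ]:
        print(prompt, "->", route(prompt))
    print(dispatch({"name": "move_head", "arguments": {"yaw_deg": 30, "pitch_deg": -10}}))
```

In a real agent, the language model would emit the `{"name": ..., "arguments": ...}` dictionaries itself, and `dispatch` would forward them to the robot's actual control API rather than returning status strings.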

A Conversation on Open Robotics

In November 2025, as part of this journey, Pollen Robotics CEO Matthieu Lapeyre and Seeed Studio CEO Eric Pan sat down to reflect on how Reachy Mini came to life — and where it may be heading.

(🎥 Video: Seeed Studio CEO Eric × Pollen Robotics CEO Matthieu)

Matthieu shared that when Reachy Mini was first announced in July, it was still a 3D-printed prototype. Yet over 3,000 orders arrived within a week, pushing the team to move quickly from prototyping to industrial production together with Seeed Studio.

Voice interaction has always been central to Reachy Mini’s design. With no screen or keyboard, the robot is meant to be spoken to directly — a choice that brought real acoustic challenges due to motor noise. Through close collaboration and multiple iterations, the teams achieved clear voice capture even while the robot is moving. Eric also spoke about Seeed’s decade-long experience in audio processing, which helped address the challenge of noise reduction during motion. Beyond engineering, he expressed his appreciation for Reachy Mini’s expressive design, noting how its large, friendly eyes give it a distinctive character among humanoid robots.

Matthieu also emphasized Reachy Mini’s openness: a simple white shell designed to be customized, and an open software stack deeply integrated with Hugging Face, allowing developers to build and deploy AI behaviors with just a few lines of Python.

Eric highlighted the shared engineering mindset behind the partnership. He described Matthieu not as a typical CEO, but as a hands-on, full-stack engineer — deeply involved in both software and hardware. This level of engagement made close, fast-paced collaboration possible, even under extremely tight timelines.

With a shared belief in open source and community, Reachy Mini stands as an open, collaborative gateway between people and AI — shaped as much by its community as by its creators.

From Prototype to Production, Together

With a shared mission of making technology accessible, Seeed Studio worked closely with Hugging Face and Pollen Robotics throughout the journey of Reachy Mini — from early prototypes to large-scale production — helping transform an open-source vision into a robot safely delivered to thousands of users worldwide.

The collaboration moved at an extraordinary pace. In less than five months, teams across companies and continents came together, spanning mechanical design, electronics, acoustics, manufacturing, and supply chain coordination. Engineers from each company worked closely, iterated quickly, and solved problems as one team.

One of the most complex challenges was acoustic. With multiple motors operating simultaneously, motor noise could easily interfere with the microphone array. Through repeated acoustic experiments and joint optimization, from structural adjustments to algorithm-level tuning, the teams steadily improved noise reduction and overall audio performance on the reSpeaker microphone array.

As the project entered its final stage, coordination became even more critical. In a short but intense final sprint, Seeed’s logistics team completed the shipment of 3,000 Reachy Mini units in just three days, ensuring they arrived safely and on time. For thousands of users around the world, Reachy Mini became their Christmas gift — a memorable moment that reflected the collective effort behind the project.

An Open, Community-Powered Ecosystem

Reachy Mini lives at the intersection of open technology and a global developer community:

- 🤗 Hugging Face Integration: Use state-of-the-art open-source models for speech, vision, and personality.

- Open-Source Everything: Hardware, software, and simulation environments are fully open-source and community-supported. Stay tuned for the hardware release!

- Community-Driven: Upload, share, and download robot behaviors with the 10M users of the Hugging Face community.

This ecosystem means Reachy Mini is not just something you use — it’s something you help shape and build.

Who Is Reachy Mini For?

Reachy Mini is designed for anyone curious about embodied AI:

- AI developers prototyping embodied intelligence

- Researchers and educators teaching and studying human-robot interaction

- Robotics enthusiasts looking for an open, extensible desktop robot

- Families and weekend makers exploring creative coding together

Looking Ahead

We’re inspired by what the community is already building with Reachy Mini, and we’re excited to see what comes next. To everyone who has received their robot — we can’t wait to see what you create, share, and learn.

This journey also reflects Seeed Studio’s ongoing commitment to lowering the barrier to robotics and embodied AI. Beyond Reachy Mini, we continue to support a growing lineup of open-source robotics kits and platforms — including SO-ARM101, Amazing Hand, LeKiwi, as well as NVIDIA Jetson–based platforms for advanced AI computing. Together, they are designed to work seamlessly with the Hugging Face LeRobot ecosystem, enabling end-to-end robot learning, experimentation, and real-world deployment.

Find more cool robots at Seeed AI Robotics

The era of Physical AI is here. We invite you to join us in exploring the advanced robotics components and hardware technologies that bring these intelligent agents to life. From sensing and perception to computation and control, let's push the boundaries of embodied AI together, building the physical AI infrastructure that turns code into reality.

Seeed NVIDIA Jetson Ecosystem

Seeed is an Elite partner for edge AI in the NVIDIA Partner Network. Explore more carrier boards, full system devices, customization services, use cases, and developer tools on Seeed’s NVIDIA Jetson ecosystem page.

Standing at the forefront of global hardware innovation, Seeed is committed to making technology accessible to all. Specializing in IoT and AI, Seeed offers a spectrum of open hardware, design, and agile manufacturing services aimed at lowering the threshold of hardware innovation.