Empowering Edge Computing: Harnessing the Power of Edge Impulse’s ‘Bring Your Own Model’ Feature to Deploy Multiple Custom AI Models on a Single Edge Device

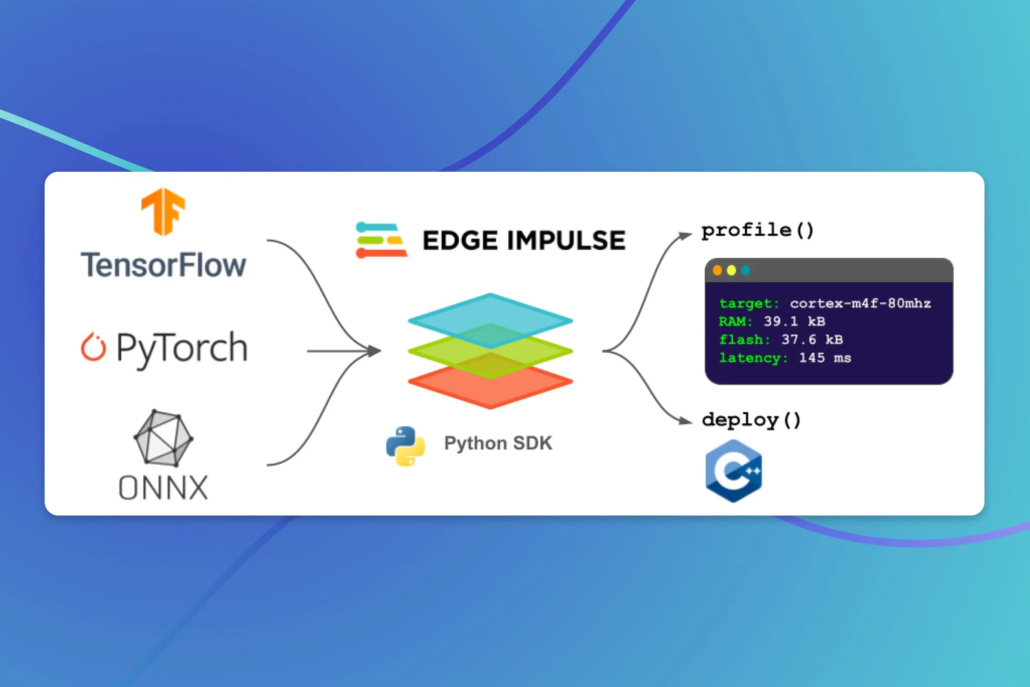

In today’s data-driven world, the field of machine learning continues to push boundaries, enabling innovative solutions to complex problems. Edge Impulse, a cutting-edge platform for embedded machine learning and Seeed Studio’s strategic software partner, has recently introduced an exciting new feature called “Bring Your Own Model” (BYOM). This functionality empowers developers, especially ML experts, to unleash their creativity, leverage their expertise, and seamlessly integrate their own pretrained machine learning models (TensorFlow SavedModel, ONNX, or TensorFlow Lite) into the Edge Impulse ecosystem and deploy them to various edge devices.

In this blog post, we will explore the concept of BYOM, its benefits, an example of bringing an EdgeLab edgelab-fomo model into Edge Impulse, and a range of TinyML-enabled products from Seeed Studio.

Unlocking the Benefits of Bring Your Own Model (BYOM)

Flexibility and Customization:

The ability to bring your own model offers developers unparalleled flexibility and customization. Whether you have developed a state-of-the-art deep learning model or a lightweight machine learning algorithm tailored to a specific domain, BYOM enables you to seamlessly integrate it into the Edge Impulse workflow. This flexibility allows you to leverage your expertise and utilize models that precisely cater to the unique requirements of your edge applications.

Optimized Performance and Accuracy:

One of the key advantages of BYOM is the potential for improved performance and accuracy. Since developers can now use models specifically designed for their use case, they can optimize them to achieve exceptional results. Fine-tuning the model’s architecture, hyperparameters, and training process can lead to significant performance gains, ensuring that your edge application meets the highest standards.

Accelerating Time-to-Deployment:

BYOM simplifies the deployment process, enabling faster time-to-market for edge applications. Instead of adapting a pre-trained model or starting from scratch, developers can seamlessly integrate their own models into the Edge Impulse platform. This streamlines the development workflow, reduces implementation complexities, and accelerates the process of deploying intelligent edge applications.

Support for Multiple Platforms:

Edge Impulse’s BYOM feature supports a wide range of edge devices, allowing developers to deploy their custom models on various platforms. Whether you’re working with microcontrollers, single-board computers, or other specialized hardware, Edge Impulse provides the necessary tools and infrastructure to run your models efficiently.

Over the last few months, Edge Impulse has announced official support for the Grove Vision AI Module and the SenseCAP A1101 LoRaWAN® Vision AI Sensor. This means that users can now collect raw data from these devices, build models, and deploy the trained machine learning models directly onto the devices through the studio, without needing any programming skills. Now, you can also bring your own model to Edge Impulse and deploy it to these edge devices.

Bring an EdgeLab model to Edge Impulse

With the custom learning block provided by Edge Impulse, integrating any training pipeline into the studio is straightforward, as long as the pipeline can generate TFLite or ONNX files as output. To illustrate this capability, we have taken the EdgeLab edgelab-fomo, a model for detecting reading activities, as an example. We converted it into the TFLite format and imported it into Edge Impulse with these simple steps:

- Convert the training data and training labels into the EdgeLab edgelab-fomo format using extract_dataset.py.

- Train the edgelab-fomo model (using https://github.com/Seeed-Studio/Edgelab).

- Convert the edgelab-fomo model into TFLite format.

- Done!

Delve into the wealth of information contained within this repository.
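
Before uploading a converted file, it can be worth a quick sanity check that it really is one of the formats BYOM accepts (TensorFlow SavedModel, ONNX, or TensorFlow Lite). The snippet below is a minimal sketch, not part of EdgeLab or Edge Impulse: the function name `guess_model_format` is hypothetical, and the logic relies on two assumptions, namely that a TFLite file carries the FlatBuffers identifier `TFL3` at bytes 4 to 8, and that ONNX files (which have no magic number) can only be recognized here by their `.onnx` extension.

```python
from pathlib import Path

# FlatBuffers file identifier used by TensorFlow Lite models
TFLITE_MAGIC = b"TFL3"


def guess_model_format(path):
    """Return 'savedmodel', 'tflite', 'onnx', or 'unknown' for a model path.

    Hypothetical helper for illustration only; it inspects the path and
    the first bytes of the file and does not validate the model itself.
    """
    p = Path(path)

    if p.is_dir():
        # A TensorFlow SavedModel is a directory that holds saved_model.pb
        return "savedmodel" if (p / "saved_model.pb").is_file() else "unknown"

    if not p.is_file():
        return "unknown"

    with p.open("rb") as f:
        header = f.read(8)

    # The identifier follows the 4-byte root table offset
    if len(header) == 8 and header[4:8] == TFLITE_MAGIC:
        return "tflite"

    # ONNX is a protobuf with no magic number, so fall back to the extension
    if p.suffix.lower() == ".onnx":
        return "onnx"

    return "unknown"
```

Calling `guess_model_format("model.tflite")` on the converted edgelab-fomo file (the file name here is only an example) should report `tflite`; an `unknown` result suggests the conversion step did not produce what you expected.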

Exploring Seeed’s TinyML-enabled Product Range: Discover the Perfect Fit for Your Applications

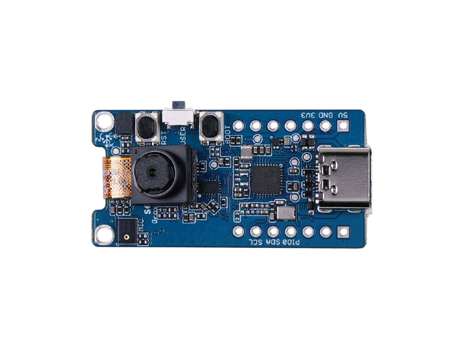

Grove Vision AI Module is a thumb-sized board based on the Himax HX6537-A processor and equipped with an OV2640 camera. It is fully supported by Edge Impulse, which allows you to sample raw data, build models, and deploy the trained ML models to the module without any programming. It is a small but capable assistant for various object detection projects and can be customized for different needs.

SenseCAP A1101 – LoRaWAN® Vision AI Sensor is a TinyML Edge AI enabled smart image sensor. It supports a variety of AI models, such as image recognition, people counting, target detection, and meter recognition. It also supports training models with TensorFlow Lite. With an operating temperature range of -40 to 85℃ and an IP66 waterproof rating, it is suitable for IoT applications in outdoor and harsh environments.

Seeed Studio XIAO ESP32S3 Sense is built on a dual-core ESP32S3 chip and supports both Wi-Fi and BLE wireless connectivity as well as battery charging. It integrates a built-in camera sensor and a digital microphone.

Seeed Studio XIAO nRF52840 Sense offers Bluetooth 5.0 wireless capability and operates with low power consumption. It features an onboard IMU and a PDM microphone.

Wio Terminal is an ATSAMD51-based microcontroller with both Bluetooth and Wi-Fi wireless connectivity powered by the Realtek RTL8720DN. It is highly integrated, with a 2.4” LCD screen and an onboard IMU, microphone, buzzer, microSD card slot, light sensor, and infrared emitter (IR 940nm).

Thanks to the active Seeed Studio community, we have collected many case studies showcasing the applications of TinyML. These studies cover diverse areas, such as object detection, motion detection, food spoilage monitoring, lake garbage monitoring, and wildlife monitoring. By bridging the gap between technology and reality, TinyML has significantly transformed our lives.

Explore these insightful case studies in this blog and see how they can inspire you to embark on the journey of TinyML.