Deploying Machine Learning on Microcontrollers: How TinyML Enables Sound, Image, and Motion Classification

Updated on Feb 6th, 2024

TinyML, short for Tiny Machine Learning, refers to the deployment of machine learning algorithms on microcontrollers, which are small, low-power computing devices that are often used in embedded systems and Internet of Things (IoT) devices.

The goal of TinyML is to bring the power of machine learning to these small devices, enabling them to perform tasks that were previously not possible or practical. By running machine learning models on microcontrollers, TinyML makes it possible to perform tasks like voice recognition, gesture recognition, and predictive maintenance on devices that have limited computational resources, such as low memory, low power, and limited processing capabilities.

There are a number of challenges associated with implementing machine learning on microcontrollers, including limited memory and processing power, as well as the need to optimize algorithms for resource-constrained environments. However, advances in hardware and software are making it increasingly possible to implement machine learning on microcontrollers, and we are seeing a growing number of applications for TinyML in areas like healthcare, agriculture, and smart homes.

This blog post explores applications of TinyML in three key areas: sound recognition, image classification, and motion classification.

TinyML in Sound Recognition

TinyML has a wide range of applications in sound recognition, which is the process of identifying and classifying sounds. Keyword spotting is the process of recognizing specific words or phrases in audio recordings. This is a common application of TinyML in sound recognition, as it enables devices like smart speakers or voice assistants to respond to specific commands. For example, a smart speaker equipped with TinyML algorithms could recognize the phrase “play music” and respond by playing a song.

The advantages of keyword spotting are numerous. First, it enables hands-free control of devices, which is particularly useful in settings where manual control is difficult or impossible, such as when driving or cooking. Second, it can improve accessibility for people with disabilities, allowing them to interact with devices using only their voice. Third, it can improve the overall user experience by making devices easier and more intuitive to use.

In terms of how keyword spotting is going to benefit our lives, the possibilities are vast and varied. For example, it could enable more efficient and natural communication with smart home devices, allowing users to control their lights, thermostats, and appliances with simple voice commands. It could also improve accessibility for people with disabilities by enabling hands-free control of devices like wheelchairs or prosthetics. Overall, keyword spotting is an exciting application of TinyML in sound recognition that has the potential to revolutionize the way we interact with technology.

Here we’ve compiled two tutorials to inspire you to build your own keyword-spotting demo using popular and common microcontrollers.

Example One: Discover the Potential of TinyML: Create Your Own Keyword Spotting Application Using XIAO nRF52840 Sense

Before starting, consider the common voice assistants on the market, such as Google Home or Amazon Echo Dot. They react only after being “woken up” by a particular keyword: “Hey Google” for the former and “Alexa” for the latter.

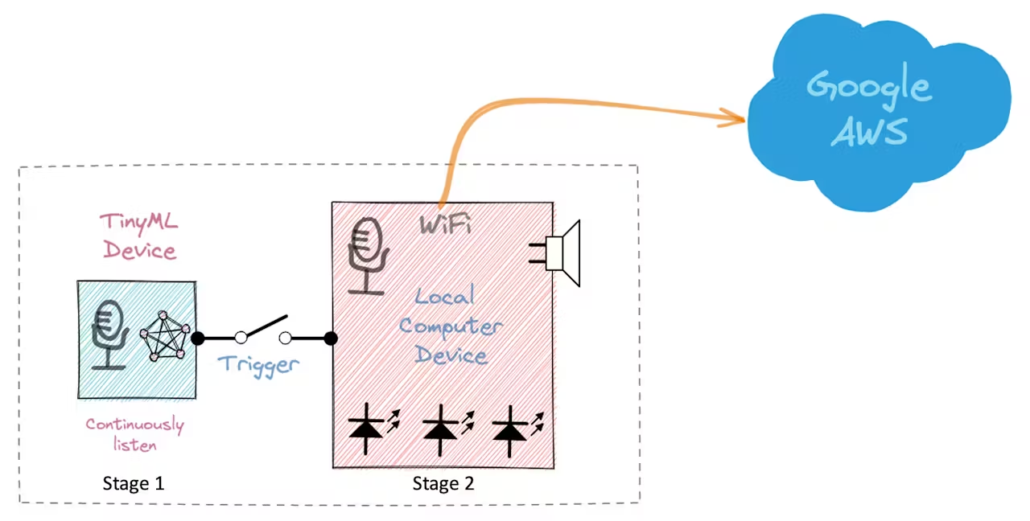

The whole process can be divided into two stages. In Stage 1, a TinyML model running on a small microprocessor listens continuously for a keyword. When the keyword is detected, it triggers Stage 2, in which the data is sent to the cloud and processed by a larger model.
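The two-stage flow can be sketched roughly as follows. This is a minimal illustration and not the firmware of any real assistant: `run_cascade`, `toy_keyword_score`, `toy_cloud`, and the 0.8 threshold are all hypothetical names and values chosen for the example.

```python
from typing import Callable, Iterable, List


def run_cascade(
    windows: Iterable[List[float]],
    keyword_score: Callable[[List[float]], float],
    send_to_cloud: Callable[[List[float]], str],
    threshold: float = 0.8,  # assumed detection threshold
) -> List[str]:
    """Stage 1 scores every audio window locally; Stage 2 runs only on detections."""
    responses = []
    for window in windows:
        # Stage 1: small always-on model running on the device
        if keyword_score(window) >= threshold:
            # Stage 2: hand the audio to a larger model in the cloud
            responses.append(send_to_cloud(window))
    return responses


# Stand-ins for the real models (hypothetical, for illustration only).
def toy_keyword_score(window: List[float]) -> float:
    return 1.0 if max(window) > 0.5 else 0.0


def toy_cloud(window: List[float]) -> str:
    return f"cloud processed {len(window)} samples"


print(run_cascade([[0.1, 0.2], [0.9, 0.3, 0.1]], toy_keyword_score, toy_cloud))
```

The point of the split is that the cheap check runs on every window, while the expensive step runs only when the keyword is likely present.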

In this project, we will focus on Stage 1 and use the XIAO BLE Sense’s digital microphone to spot the keyword for the KWS application.

Hardware Introduction: The XIAO nRF52840 Sense is particularly well-suited for TinyML applications because it is equipped with a powerful 32-bit ARM Cortex-M4 processor and has 256KB of RAM and 1MB of flash memory. This makes it capable of running complex machine-learning algorithms, even with the limited resources of a microcontroller. In addition, the XIAO nRF52840 Sense features a range of sensors, including a microphone and accelerometer, which are essential for sound and motion recognition applications. It also has built-in Bluetooth and USB connectivity, which makes it easy to integrate with other devices and systems.

Basic Diagram of Keyword Spotting Project

1. Dataset

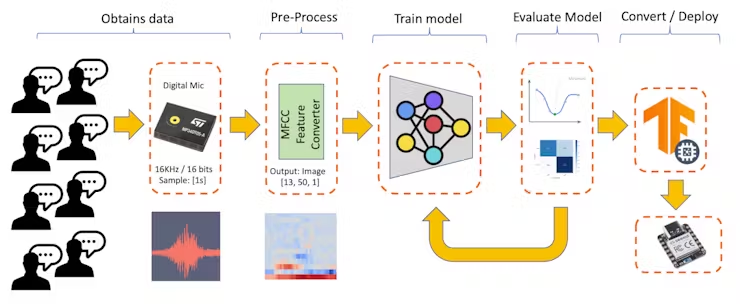

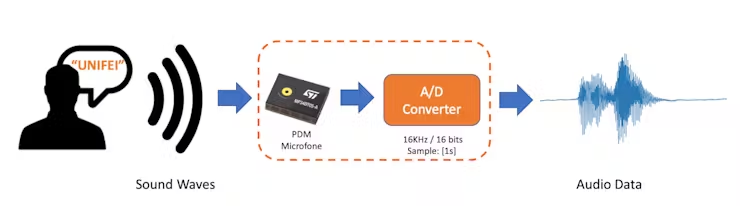

The dataset is a critical component of the machine learning workflow, especially when working with sound classification. Since we’re dealing with audio data, the sound waves captured by the microphone should be sampled at 16 kHz with a 16-bit depth. This specification can be met by various devices, including the XIAO BLE Sense, a computer, or a mobile phone.
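To get a feel for what this sampling specification means in storage terms, here is a small arithmetic sketch. The sample rate and bit depth come from the text; the single (mono) channel is an assumption, and `pcm_bytes` is a hypothetical helper name.

```python
SAMPLE_RATE_HZ = 16_000  # from the text
BIT_DEPTH = 16           # from the text
CHANNELS = 1             # assumption: a single (mono) microphone


def pcm_bytes(seconds: float) -> int:
    """Bytes of raw, uncompressed PCM audio produced in `seconds` of recording."""
    samples = round(seconds * SAMPLE_RATE_HZ)
    return samples * CHANNELS * (BIT_DEPTH // 8)


print(pcm_bytes(1))   # bytes for one second of audio
print(pcm_bytes(10))  # bytes for ten seconds of audio
```

Under these assumptions, one second of audio takes 32,000 bytes, so ten seconds already exceeds the 256KB of RAM quoted for the XIAO nRF52840 Sense. That suggests why recordings are usually stored off-chip, for example on an SD card, rather than held in memory.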

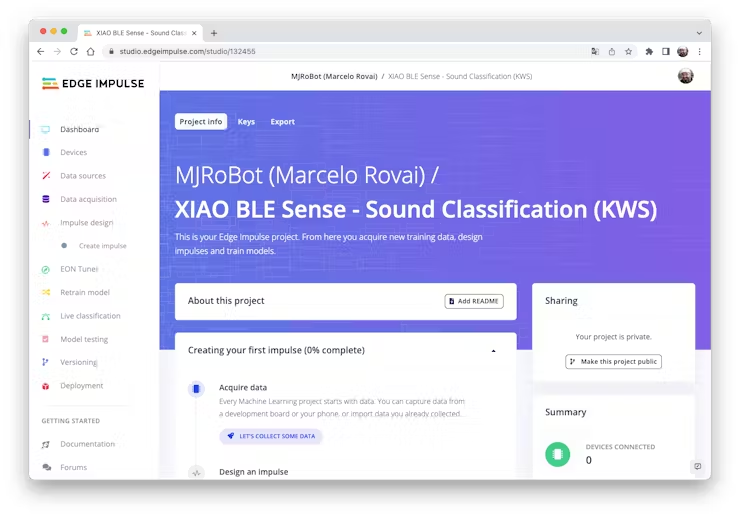

2. Capturing online Audio Data with Edge Impulse and a smartphone

In the TinyML Made Easy tutorial for anomaly detection and motion classification, we learned how to install and test our device using the Arduino IDE and connect it to Edge Impulse Studio for data capture. However, audio data cannot be captured with the Data Forwarder function because of the high sampling frequency of 16 kHz. Currently, the easiest way to capture audio data is to save it as a WAV file and upload it with the uploader. Alternatively, audio data can be captured using a smartphone connected to Edge Impulse Studio online. Further guidance can be found in the Edge Impulse documentation and tutorials.

3. Capturing (offline) Audio Data with the XIAO BLE Sense

The easiest way to capture audio and save it locally as a .wav file is to use an expansion board for the XIAO family of devices, the Seeed Studio XIAO Expansion board. This expansion board makes it quick and easy to build prototypes and projects using its rich peripherals, such as an OLED display, SD card interface, RTC, passive buzzer, RESET/User button, 5V servo connector, and multiple data interfaces.

This tutorial will focus on classifying keywords, and the MicroSD card available on the device will be very important in helping us with data capture.

For detailed steps, see the guide on how to save recorded audio from the microphone on an SD card.

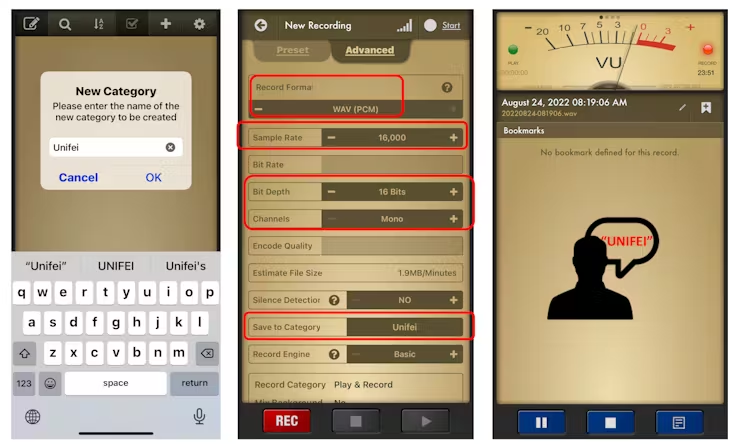

4. Capturing (offline) Audio Data with a smartphone or PC

Another option for capturing audio data is to use a PC or smartphone, recording at a sampling frequency of 16 kHz and a bit depth of 16 bits. Voice Recorder Pro (iOS) is a good app for this purpose. The recorded audio can be saved as .wav files and sent to your computer for further use.
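Before uploading recordings, it can help to confirm that each file really matches the 16 kHz, 16-bit specification. The sketch below uses Python's standard `wave` module. `write_silence` and `matches_spec` are hypothetical helper names, and the silent files stand in for real recordings.

```python
import os
import tempfile
import wave


def write_silence(path, seconds=1, rate=16_000):
    """Write a mono, 16-bit WAV file of silence (a stand-in for a real recording)."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16 bits
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * (rate * seconds))


def matches_spec(path, rate=16_000, bits=16):
    """Return True if the WAV file has the expected sample rate and bit depth."""
    with wave.open(path, "rb") as wav:
        return wav.getframerate() == rate and wav.getsampwidth() * 8 == bits


with tempfile.TemporaryDirectory() as folder:
    good = os.path.join(folder, "good.wav")
    bad = os.path.join(folder, "bad.wav")
    write_silence(good, rate=16_000)
    write_silence(bad, rate=44_100)  # a common phone default, used here as a mismatch
    print(matches_spec(good), matches_spec(bad))
```

Files that fail the check would need to be resampled or re-recorded before being used for training.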

5. Training model with Edge Impulse Studio

This section includes the following steps: pre-processing using MFCC, model design and training, testing, deployment and inference, and post-processing. We won’t go into detail about these steps, but you can check Seeed’s wiki (Seeed Studio XIAO nRF52840 Sense Edge Impulse Getting Started) or the original project tutorials on Hackster for more information on creating the impulse, pre-processing, and model definition.
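As a rough illustration of what MFCC pre-processing involves, the sketch below shows two pieces of its arithmetic: splitting a clip into short overlapping frames, and mapping frequencies onto the mel scale. It is not a full MFCC implementation and does not reproduce Edge Impulse's processing block. The 1-second clip, 20 ms frame, and 10 ms stride are assumed values, and the mel formula is one widely used variant.

```python
import math


def frame_count(n_samples: int, frame_len: int, stride: int) -> int:
    """Number of full frames that fit when sliding forward by `stride` samples."""
    if n_samples < frame_len:
        return 0
    return 1 + (n_samples - frame_len) // stride


def hz_to_mel(freq_hz: float) -> float:
    """Convert a frequency in Hz to mels (a common formula, one of several variants)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


SAMPLE_RATE_HZ = 16_000                      # from the text
clip_samples = SAMPLE_RATE_HZ * 1            # assumed 1-second clip
frame_len = SAMPLE_RATE_HZ * 20 // 1000      # assumed 20 ms frame
stride = SAMPLE_RATE_HZ * 10 // 1000         # assumed 10 ms stride

print(frame_count(clip_samples, frame_len, stride))
print(round(hz_to_mel(1000.0)), round(hz_to_mel(8000.0)))
```

Each frame is later reduced to a handful of coefficients, so the model sees a small grid of numbers instead of 16,000 raw samples per second.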

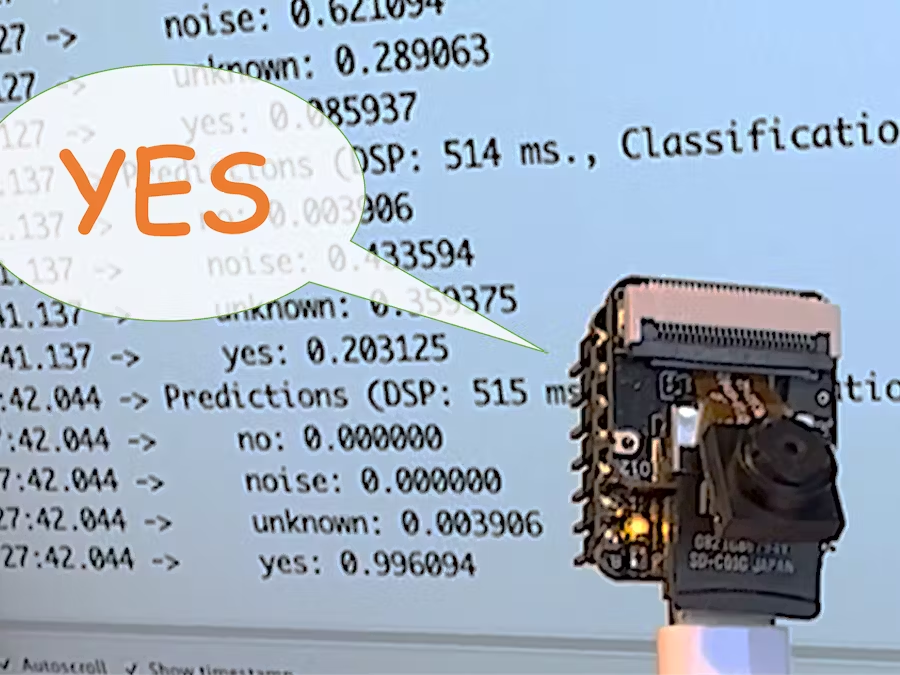

Example Two: TinyML Made Easy – Keyword Spotting (KWS) with XIAO ESP32S3

We continue exploring Machine Learning on the giant new tiny device of the Seeed XIAO family, the ESP32S3 Sense.

Hardware Introduction: The XIAO ESP32S3 Sense, powered by Espressif’s ESP32-S3 chip, is a compact and powerful microcontroller that offers a dual-core Xtensa LX7 processor, integrated Wi-Fi, and Bluetooth. Its balance of computational power, energy efficiency, and versatile connectivity make it an excellent platform for TinyML applications. With its expansion board, we gain access to the device’s “sense” features, including a 1600×1200 OV2640 camera, an SD card slot, and a digital microphone. These components, especially the integrated microphone and SD card, are essential in this project.

The project starts with an overview of the Machine Learning Workflow and the steps involved in creating an impulse, pre-processing, model definition, and training on the XIAO ESP32S3 Sense. It shows how to use Edge Impulse to capture and label audio data and train a machine learning model. Next, the project demonstrates how to use the XIAO ESP32S3 and the Edge Impulse firmware to collect audio data and perform real-time keyword spotting. The project includes a detailed explanation of the code and the steps involved in deploying and testing the model on the microcontroller. Finally, the project shows how to store the audio data on an SD card and perform post-processing tasks to analyze and visualize the data. The project concludes with a discussion of the potential applications of Keyword Spotting and Machine Learning on microcontrollers and provides resources for further learning.

TinyML in Image Classification

Image classification is a type of computer vision task that involves categorizing images into predefined classes or categories. The goal of image classification is to teach a machine-learning model to recognize and distinguish between different objects or patterns within images.

In image classification, a machine learning model is trained on a dataset of labeled images, where each image is associated with a particular class or category. The model learns to identify and extract features from the images that are relevant to the classification task, such as shapes, colors, or textures.

Once the model is trained, it can be used to predict the class or category of new, unlabeled images. The model analyzes the features of the new image and compares them to the features of the images in the training dataset to make a prediction.
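In many neural-network classifiers, the final prediction step amounts to turning raw class scores into probabilities and picking the most likely label. The sketch below illustrates that step only. The class names and scores are made up for the example, and `softmax` and `predict` are hypothetical helper names, not part of any specific library.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(scores, labels):
    """Return the most likely label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


labels = ["fruit", "veggie", "background"]  # hypothetical classes
label, confidence = predict([2.1, 0.3, -1.0], labels)
print(label, round(confidence, 2))
```

On a device, the confidence value is often compared against a threshold so that uncertain predictions can be ignored.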

Image classification has many applications, including object recognition, facial recognition, and medical diagnosis. It is a fundamental task in computer vision and is used in a wide range of industries, from manufacturing to healthcare to entertainment.

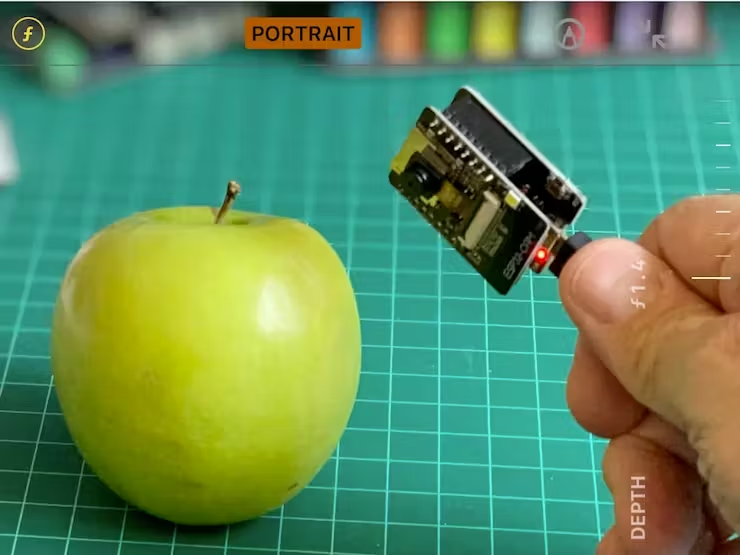

Example One: TinyML Made Easy: Image Classification on XIAO ESP32S3 Sense

Hardware Introduction: We recently released the XIAO ESP32S3 Sense, an affordable development board that combines embedded ML computing power and photography capability. The board, with 8MB of PSRAM and 8MB of flash, integrates a camera sensor, digital microphone, and SD card support, making it a great tool for developers looking to start with TinyML (intelligent voice and vision AI). You can easily prototype and deploy intelligent vision and voice applications, such as image classification and object detection.

The whole project is divided into the following steps:

- Installing the XIAO ESP32S3 Sense on Arduino IDE

- Testing the board with BLINK

- Connecting Sense module (Expansion Board)

- Microphone Test

- Testing the Camera

- Testing WiFi

- Fruits versus Veggies – A TinyML Image Classification Project

- Training the model with Edge Impulse Studio

- Testing the Model (Inference)

- Testing with a bigger model

TinyML in Motion Classification/Anomaly Detection

TinyML can be used in motion classification and anomaly detection to enable real-time analysis of motion data from sensors, such as accelerometers and gyroscopes. Motion classification involves categorizing motion data into predefined classes or categories, while anomaly detection involves identifying abnormal or unexpected patterns of motion.

One example of motion classification using TinyML is in the analysis of human activities, such as walking, running, or jumping. In this application, a machine learning model is trained on a dataset of labeled motion data, where each motion is associated with a particular activity class. The model learns to identify and extract features from the motion data that are relevant to the activity classification task, such as frequency, amplitude, or phase.
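To make features such as amplitude and frequency concrete, here is a minimal sketch that extracts two of them from one window of accelerometer samples, using a plain discrete Fourier transform written out by hand. The 50 Hz sample rate and the synthetic 2 Hz signal are assumptions for the demo, and `rms_amplitude` and `dominant_frequency` are hypothetical helper names.

```python
import cmath
import math


def rms_amplitude(samples):
    """Root-mean-square amplitude after removing the mean (e.g. gravity offset)."""
    mean = sum(samples) / len(samples)
    return math.sqrt(sum((s - mean) ** 2 for s in samples) / len(samples))


def dominant_frequency(samples, sample_rate_hz):
    """Frequency (Hz) of the strongest non-DC component, via a naive DFT."""
    n = len(samples)
    mean = sum(samples) / n
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(
            (s - mean) * cmath.exp(-2j * math.pi * k * i / n)
            for i, s in enumerate(samples)
        )
        if abs(coeff) > best_mag:
            best_bin, best_mag = k, abs(coeff)
    return best_bin * sample_rate_hz / n


SAMPLE_RATE_HZ = 50  # assumed accelerometer sample rate
window = [math.sin(2 * math.pi * 2.0 * i / SAMPLE_RATE_HZ) for i in range(100)]
print(round(rms_amplitude(window), 3), dominant_frequency(window, SAMPLE_RATE_HZ))
```

A real pipeline would compute such features per axis and would normally use an FFT, but the idea is the same: a window of raw samples becomes a short feature vector for the classifier.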

Once the model is trained, it can be used to predict the activity class of new, unlabeled motion data in real time. The model analyzes the features of the new motion data and compares them to the features of the motion data in the training dataset to make a prediction.

Anomaly detection using TinyML involves training a machine learning model to recognize normal patterns of motion and identify abnormal or unexpected patterns. This can be useful in applications such as predictive maintenance, where abnormal motion patterns can indicate the presence of a fault or defect.
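The idea of learning what "normal" looks like and flagging departures from it can be sketched with a very simple statistical rule. This is a deliberately simplified stand-in and not the method used by any particular tool: it scores a sample by how many standard deviations it lies from the normal data. The feature values, the threshold of 3, and the helper names are all assumptions.

```python
import math


def fit_normal(rows):
    """Learn per-feature mean and standard deviation from normal-only data."""
    n, dims = len(rows), len(rows[0])
    means = [sum(r[d] for r in rows) / n for d in range(dims)]
    stds = [
        math.sqrt(sum((r[d] - means[d]) ** 2 for r in rows) / n) or 1.0  # avoid zero
        for d in range(dims)
    ]
    return means, stds


def anomaly_score(row, means, stds):
    """Largest absolute z-score across features; higher means more unusual."""
    return max(abs(x - m) / s for x, m, s in zip(row, means, stds))


# Hypothetical [vibration RMS, dominant frequency] features from normal operation.
normal = [[1.0, 0.2], [1.2, 0.1], [0.8, 0.3], [1.0, 0.2]]
means, stds = fit_normal(normal)

THRESHOLD = 3.0  # assumed cut-off
for sample in ([1.1, 0.2], [3.0, 0.2]):
    score = anomaly_score(sample, means, stds)
    print(sample, "anomaly" if score > THRESHOLD else "normal")
```

Because only normal data is needed for training, this style of detector can flag faults that were never seen before, which is what makes it attractive for predictive maintenance.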

To use TinyML in motion classification and anomaly detection, a microcontroller with sensor input capabilities is required, along with a machine learning model optimized for the microcontroller environment. Techniques such as quantization, pruning, and compression can be used to reduce the size of the model and optimize its performance for the microcontroller.
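Of the techniques listed above, quantization is the easiest to show in a few lines. The sketch below maps floating-point weights to 8-bit integers with a scale and zero point, then maps them back to see how much precision is lost. It is a simplified illustration of affine int8 quantization and not the exact scheme of any specific toolchain. The weight values and helper names are made up.

```python
def quantize_int8(weights):
    """Map floats to int8 values using an affine (scale + zero point) scheme."""
    lo, hi = min(min(weights), 0.0), max(max(weights), 0.0)  # keep 0.0 in range
    scale = (hi - lo) / 255 or 1.0  # 256 levels; fall back to 1.0 if all zeros
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(w / scale) + zero_point)) for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from the int8 representation."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, 0.0, 0.5, 1.0]  # hypothetical float32 weights
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)

print(q)
print([round(r, 3) for r in restored])
print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now occupies 1 byte instead of the 4 bytes of a 32-bit float, at the cost of a small rounding error of roughly one quantization step at most.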

Overall, TinyML in motion classification and anomaly detection can enable real-time analysis of motion data from sensors, providing valuable insights and enabling a wide range of applications in areas such as healthcare, sports, and industrial automation.

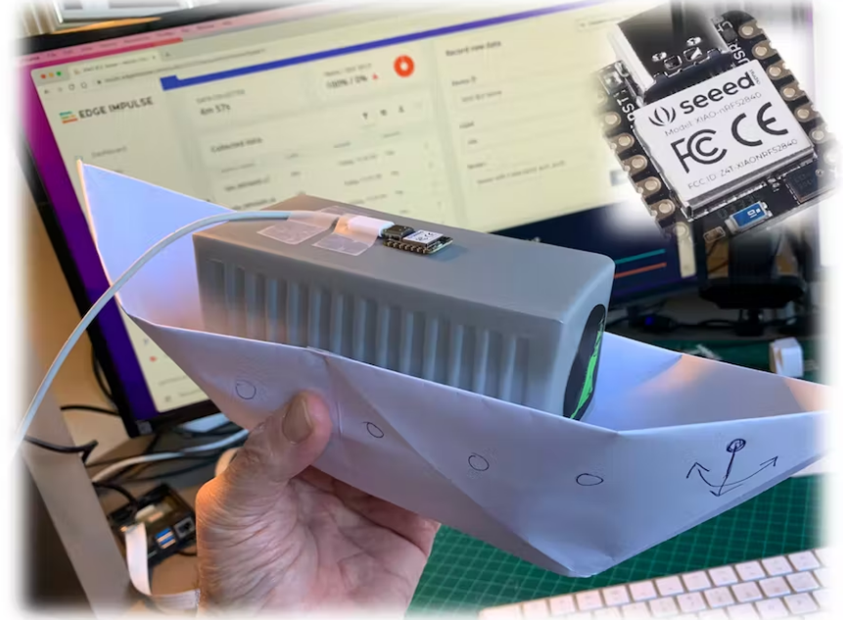

Example One: Anomaly Detection & Motion Classification for Containers in Transportation by XIAO nRF52840 Sense

Background: Cargo damage is a common occurrence during transit, caused by factors such as dropping, rolling, breakages, or being knocked during handling. Careless movement of mechanical handling equipment and inattention to weight loads and lifting gear are two common causes of cargo damage. Correct pallet packing techniques and proper truck loading techniques can help prevent these issues.

Solution: Anomaly detection tool powered by XIAO nRF52840 Sense

To prevent cargo damage, an anomaly detection tool powered by XIAO nRF52840 Sense can quickly detect anomalies and trigger appropriate countermeasures. This tool can protect the interests of cargo owners and avoid unnecessary losses during transportation. The device can identify the transportation history of a specific container by splitting its journey into typical situations such as maritime, terrestrial, lift, and idle. An anomaly is flagged when a container is in a situation that is not typical, such as unexpected handling or storage conditions. Staff can then take appropriate measures to check whether an emergency has occurred.

Why XIAO nRF52840 Sense: Low-cost and intelligent with TinyML

Marcelo Rovai chose TinyML and the XIAO nRF52840 Sense microcontroller to create this gadget. Microcontrollers are low-cost electronic components designed to use tiny amounts of energy and can be found in almost any consumer, medical, automotive, or industrial device. TinyML is a new technology that uses microcontrollers and machine learning algorithms to extract meaning from sensor data. With the rise of the Internet of Things (IoT), the data generated by microcontrollers is increasing, but much of it remains unused due to the high cost and complexity of data transmission. TinyML enables machine intelligence on these devices, using very little power, to interpret more of the sensor data.

The Seeed Studio XIAO nRF52840 Sense features an onboard IMU and PDM microphone and can measure and report the specific force (acceleration) and angular rate of an object to which it is attached. It also carries Bluetooth 5.0 wireless capability and operates with low power consumption, making it an ideal platform for this type of application.

Overall, the anomaly detection tool powered by XIAO nRF52840 Sense is an innovative solution to prevent cargo damage during transit. It combines the power of TinyML and microcontrollers to enable real-time analysis of sensor data and trigger appropriate measures to prevent cargo damage.

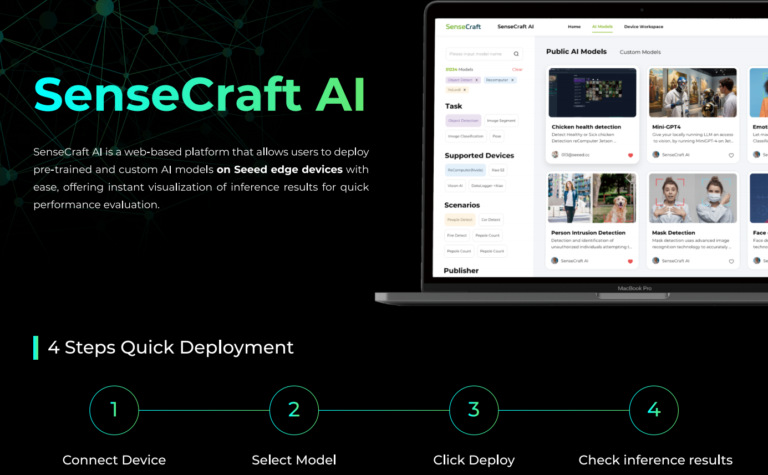

Introducing SenseCraft AI

SenseCraft AI is a platform that enables easy AI model training and deployment with a no-code/low-code approach. It currently supports the XIAO ESP32S3, Grove Vision AI Module v2, and reComputer Jetson, ensuring that trained models are fully compatible with these products. Moreover, deploying models through this platform offers immediate visualization of identification results on the website, enabling prompt assessment of model performance.

Ideal for tinyML applications, it allows you to effortlessly deploy off-the-shelf or custom AI models by connecting the device, selecting a model, and viewing identification results.

TinyML enables the development of intelligent, low-power, and cost-effective embedded systems and IoT devices that can perform sound, image, and motion classification tasks. This opens up new opportunities for a wide range of applications, from smart home devices to healthcare to industrial automation.

Explore more TinyML stories:

- Empowering Travel Safety with XIAO ESP32S3 Sense, Round Display for XIAO, and TinyML: AI-Driven Keychain Detection for Instant Alerts and Location Request

- Snake Recognition System: Harnessing LoRaWAN and XIAO ESP32S3 Sense for TinyML

- NMCS: Empowering Your Coffee Experience with Sound and Vision Classification

- IoT-Enabled Tree Disease Detection: Harnessing Vision AI, Wio Terminal, and TinyML

- Revolutionizing Wildlife Monitoring: TinyML, IoT, and LoRa Technologies with XIAO ESP32S3 Sense and Wio E5 Module

- Innovative Community Projects That Utilized Grove-Vision AI Module: 11 Inspiring Stories