Seeed Studio XIAO-Based AI Gadget Reference Designs

By Kezang Loday

The Seeed Studio XIAO Series is redefining what’s possible with edge AI, powering everything from voice-controlled wearables to smart microscopes in compact, low-power designs. In this blog, we explore over 25 open-source AI gadget projects and ready-to-use devices powered by XIAO, showing how makers are putting real-time intelligence to work across vision, speech, and motion.

AI at the edge is changing how we interact with the world, enabling real-time recognition, voice assistants, and intelligent automation in ultra-compact, energy-efficient designs. With onboard camera or microphone support on the Sense models and compatibility with TensorFlow Lite, Edge Impulse, and SenseCraft AI, the Seeed Studio XIAO Series has become a go-to choice for building smart, connected devices that run AI locally.

In this blog, we’ve collected 25+ inspiring AI gadget reference designs powered by XIAO, from distraction-reducing study lamps, wearable voice note-takers, and wearable nutrition trackers to ChatGPT-powered companions and self-balancing robots. Whether you’re prototyping voice-controlled toys, computer vision assistants, or AI-powered wearables, these projects show what’s possible with embedded intelligence running right on the microcontroller.

Creator: abe’s projects

Powered by: XIAO ESP32S3 Sense

This real-life Pokédex combines AI voice cloning, computer vision, and custom 3D printing to recognize Pokémon in the physical world. Driven by the XIAO ESP32-S3 Sense, it identifies toys and figures using a built-in camera and responds with synthesized Pokémon-style narration. The project creatively blends nostalgia with cutting-edge tech, turning a fictional gadget into a functional reality. While not perfect, it showcases how embedded AI can deliver interactive experiences straight out of fantasy. See the full build and demonstration on YouTube.

Creator: Arpan Mondal

Powered by: Grove Vision AI Module V2, XIAO ESP32S3

This project turns a study lamp into a distraction-aware tool that reacts when a phone is detected. Using a Grove Vision AI module trained to spot smartphones, the lamp shifts from warm white to deep red light when a phone appears in view, encouraging users to refocus. The system, built with a WS2812B LED strip and controlled by a XIAO ESP32S3, changes color based on the module’s detections and reverts to warm lighting when the phone is removed. Step-by-step instructions cover AI model setup, Arduino programming, custom lamp assembly, and LED integration. You can find the complete project details on Instructables.
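
The lamp’s behavior boils down to a small state machine. Below is a minimal Python sketch of that logic with a debounce added, so a single missed or spurious detection doesn’t make the light flicker. The `"phone"` label, the RGB values, and the frame thresholds are assumptions for illustration; on the XIAO ESP32S3 the equivalent logic would run in the Arduino loop that drives the WS2812B strip.

```python
from dataclasses import dataclass

# Hypothetical colors: the project's exact RGB values are not given.
WARM_WHITE = (255, 170, 80)
DEEP_RED = (180, 0, 0)


@dataclass
class LampState:
    """Debounced phone-detection state for a distraction-aware lamp (illustrative)."""
    on_frames: int = 3     # consecutive frames with a phone before turning red
    off_frames: int = 10   # consecutive frames without a phone before reverting
    distracted: bool = False
    streak: int = 0        # frames in a row that disagree with the current state

    def update(self, labels: list[str]) -> tuple[int, int, int]:
        """Feed one frame's detected labels and return the LED color to show."""
        phone_seen = "phone" in labels  # hypothetical class name from the model
        if phone_seen != self.distracted:
            self.streak += 1
            needed = self.on_frames if phone_seen else self.off_frames
            if self.streak >= needed:
                self.distracted = phone_seen
                self.streak = 0
        else:
            self.streak = 0
        return DEEP_RED if self.distracted else WARM_WHITE
```

Making `off_frames` longer than `on_frames` keeps the lamp quick to react but slow to forgive, so it doesn’t flash back to white if the phone briefly leaves the camera’s view.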

Creator: ChromaLock

Powered by: XIAO ESP32C3

This DIY device transforms a standard TI-84 calculator into a stealthy test-day assistant by connecting it to the internet and bypassing anti-cheating mechanisms. The mod allows access to AI tools like ChatGPT, essentially turning the calculator into a covert knowledge hub. While clearly intended as satire, the project is a bold exploration of hacking, microcontroller integration, and ethical gray areas in academic tech. It highlights the inventive potential (and real risks) of modifying everyday tools with embedded connectivity. Watch the full breakdown on YouTube and get resources from GitHub.

Creator: Lucidbeaming

Powered by: Grove Vision AI Module V2, XIAO ESP32C3

This creative project transforms object recognition into generative music, using a custom-trained YOLOv5 model on the Grove Vision AI Module V2 to identify plastic skulls and teeth. Detection data is passed to a Raspberry Pi via MQTT, where it triggers sounds through FluidSynth. The build uses a XIAO ESP32C3 for control, a custom AI model trained on 176 manually processed images, and a Mosquitto broker for communication. Training guidance covers annotation strategies and model conversion in Roboflow and Google Colab. While documentation was a challenge, the project showcases the possibilities of human-machine artistic interfaces. You can find the complete project details here.
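
On the Raspberry Pi side, each MQTT message has to be turned into notes for FluidSynth. The sketch below assumes a hypothetical JSON payload of labelled detections with confidence scores and a hypothetical class-to-note table; the project’s actual message format and sound mapping may differ.

```python
import json

# Hypothetical mapping from detected class to a MIDI note number.
NOTE_FOR_CLASS = {"skull": 48, "tooth": 67}


def detections_to_notes(payload: str, min_score: float = 0.5) -> list[tuple[int, int]]:
    """Turn one MQTT message into (midi_note, velocity) pairs.

    Assumes a payload like {"boxes": [{"label": "skull", "score": 0.9}, ...]};
    this format is illustrative, not the project's documented schema.
    """
    data = json.loads(payload)
    notes = []
    for box in data.get("boxes", []):
        note = NOTE_FOR_CLASS.get(box.get("label"))
        score = box.get("score", 0.0)
        if note is None or score < min_score:
            continue  # unknown class or low-confidence detection
        # Map confidence linearly onto MIDI velocity, clamped to 1..127.
        velocity = max(1, min(127, round(score * 127)))
        notes.append((note, velocity))
    return notes
```

In a real setup, an MQTT client callback (for example paho-mqtt’s `on_message`) would pass the decoded payload to `detections_to_notes()` and forward each pair to the synthesizer as a note-on event.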

Creator: Christopher Pietsch

Powered by: XIAO RP2040

Transferscope is a handheld device that captures real-world textures and patterns and instantly applies them to new images using Stable Diffusion. Built with a Raspberry Pi Zero 2, HyperPixel screen, and a single-button XIAO RP2040 controller, it offers tactile AI prompting without complex inputs. The device detects edges, sends data to a GPU server, and uses Kosmos-2 for interpretation and Stable Diffusion for synthesis—all within a second. Designed for spontaneity and creativity, it’s housed in a custom 3D-printed case with a camera and Li-ion battery. Future upgrades may include accelerometers, haptics, and metal casing. You can find the complete project details on our blog.

Creator: Bruno Santos

Powered by: XIAO ESP32S3 Sense, XIAO Round Display

This whimsical project turns voice recognition into a treat dispenser. Built around a XIAO ESP32S3 Sense and a XIAO Round Display, the device records a 5-second phrase and sends it to a remote server. Using Whisper for transcription and BART-MNLI for sentiment classification, it decides whether to dispense candy based on whether the phrase is positive about candy. The setup includes a 3D-printed housing, Arduino code, and Docker-based backend options for GPU or CPU, with full deployment instructions. You can find the complete project details on GitHub.
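
The decision step on the backend can be small. The sketch below assumes the Whisper transcript is scored with the Hugging Face `zero-shot-classification` pipeline using the `facebook/bart-large-mnli` checkpoint; the candidate label wording and the 0.6 threshold are our assumptions, not the project’s settings.

```python
# Label wording and threshold are assumptions, not the project's actual values.
POSITIVE = "positive about candy"
NEGATIVE = "negative about candy"

# On the server, `result` could come from the Hugging Face zero-shot pipeline
# (requires the `transformers` package):
#   from transformers import pipeline
#   classifier = pipeline("zero-shot-classification", model="facebook/bart-large-mnli")
#   result = classifier(transcript, candidate_labels=[POSITIVE, NEGATIVE])


def should_dispense(result: dict, threshold: float = 0.6) -> bool:
    """Return True when the zero-shot score for POSITIVE clears the threshold.

    `result` follows the pipeline's output shape: parallel "labels" and
    "scores" lists, sorted by descending score.
    """
    scores = dict(zip(result["labels"], result["scores"]))
    return scores.get(POSITIVE, 0.0) >= threshold
```

Looking the score up by label, rather than taking the first entry, keeps the check correct regardless of how the pipeline orders its output.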

Creator: Jacob Trebil

Powered by: XIAO ESP32S3 Sense

Vibe is a sleek, pendant-sized wearable that uses AI and computer vision to passively track food intake. Built with the compact XIAO ESP32S3 Sense, it captures images during meals and sends them via Bluetooth to a mobile app, where Vision LLMs analyze food types and log nutrition. Designed for frictionless habit-building, it avoids interruptions and supports encrypted data handling. Vibe v2 will be smaller and more energy-efficient, with planned additions like hydration tracking and workout detection. You can find the complete project details on our blog.
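
Sending a camera frame over Bluetooth Low Energy means splitting it into packets that fit the link’s payload size. This Python sketch shows one simple framing scheme, a hypothetical index/total header on each packet. Vibe’s actual protocol isn’t described, and the usable payload size depends on the MTU the phone negotiates, so 240 bytes is only a placeholder.

```python
import struct

# Hypothetical 4-byte header: packet index and total packet count (big-endian).
HEADER = struct.Struct(">HH")


def chunk_image(data: bytes, payload_size: int = 240) -> list[bytes]:
    """Split a JPEG (or any byte string) into numbered BLE-sized packets."""
    total = max(1, -(-len(data) // payload_size))  # ceiling division
    if total > 0xFFFF:
        raise ValueError("image too large for a 16-bit packet counter")
    return [
        HEADER.pack(i, total) + data[i * payload_size:(i + 1) * payload_size]
        for i in range(total)
    ]


def reassemble(packets: list[bytes]) -> bytes:
    """Rebuild the image on the phone side, tolerating out-of-order delivery."""
    parts: dict[int, bytes] = {}
    total = None
    for packet in packets:
        index, total = HEADER.unpack_from(packet)
        parts[index] = packet[HEADER.size:]
    if total is None or len(parts) != total:
        raise ValueError("missing packets")
    return b"".join(parts[i] for i in range(total))
```

Carrying the total count in every packet lets the receiver detect a dropped packet without a separate end-of-image message.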

Creator: Michael Merrifield

Powered by: XIAO ESP32S3

MiniMe is a voice-activated AI companion that mimics its creator using speech recognition, personalized ChatGPT responses, and voice cloning. Housed in a tiny 3D-printed box, it transcribes speech via OpenAI’s Whisper, enriches queries with biographical context, and replies using ElevenLabs voice synthesis. Audio input and output run through I2S modules and a 3W speaker, all driven by the XIAO ESP32S3. Check out our blog for more details.
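
“Enriching queries with biographical context” usually means wrapping the transcript in a persona prompt before it reaches the language model. Here is a minimal Python sketch under that assumption; the facts, the prompt wording, and the `max_facts` cap are hypothetical placeholders, not MiniMe’s actual prompt.

```python
# Placeholder facts: the creator's real biographical context is not published here.
BIO_FACTS = [
    "I grew up near the coast.",
    "I build electronics projects in my spare time.",
]


def build_messages(transcript: str, bio_facts: list[str], max_facts: int = 20) -> list[dict]:
    """Wrap a transcribed question with persona context in chat-message format."""
    bio = "\n".join(f"- {fact}" for fact in bio_facts[:max_facts])
    system_prompt = (
        "You are a miniature version of your creator. Answer briefly, in the "
        "first person, using these facts about yourself when relevant:\n" + bio
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": transcript.strip()},
    ]
```

The resulting list follows the `messages` structure used by OpenAI’s Chat Completions API; the model’s reply would then go to ElevenLabs for voice synthesis. Capping the number of facts keeps the prompt short, which can help response time on a voice device.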

Created by: Cathy Mengying Fang, Patrick Chwalek, Quincy Kuang, and Pattie Maes from MIT Media Lab

Powered by: XIAO ESP32S3 Sense, XIAO Round Display

WatchThis is a gesture-based wearable assistant that combines computer vision and GPT-4o to answer questions about real-world objects. Users flip up the wrist-mounted camera, point at something, and tap the screen—triggering image capture and contextual queries like “What is this?” or “Translate this.” The system runs on Arduino-compatible C++ and delivers on-screen responses within 3 seconds, with customizable prompts via a built-in WebApp. Applications include real-time translation, navigation, and smart home control, making it ideal for travelers and accessibility solutions. Check out the full details on our blog here.

Creator: Cayden Pierce

Powered by: XIAO ESP32S3, XIAO Round Display

uPhone is a necklace-style AI device that displays real-time translation, navigation, and shared information outward to conversation partners. It features a round RGB touchscreen and a gesture-controlled glove powered by a XIAO ESP32S3, which uses magnets and Hall-effect sensors to switch apps over BLE. Built to foster connection and reduce tech isolation, uPhone transforms face-to-face conversations into collaborative, informed experiences. Click here for more details.
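
The glove’s magnet-and-sensor gestures behave like buttons, and Hall-effect readings need hysteresis so a magnet hovering near the threshold doesn’t toggle repeatedly. This Python sketch assumes one analog sensor per gesture, each mapped to an app index; the thresholds and the mapping are hypothetical.

```python
from typing import Optional


class HallSwitch:
    """One Hall-effect sensor read as a button, with hysteresis.

    Thresholds are hypothetical ADC counts; whether a nearby magnet raises or
    lowers the reading depends on the sensor and magnet polarity.
    """

    def __init__(self, press_above: int = 700, release_below: int = 600):
        self.press_above = press_above
        self.release_below = release_below
        self.pressed = False

    def update(self, reading: int) -> bool:
        """Return True only on the released-to-pressed transition."""
        if not self.pressed and reading > self.press_above:
            self.pressed = True
            return True
        if self.pressed and reading < self.release_below:
            self.pressed = False
        return False


def select_app(switches: list[HallSwitch], readings: list[int]) -> Optional[int]:
    """Return the index of the first sensor that was just triggered, if any."""
    selected = None
    for index, (switch, reading) in enumerate(zip(switches, readings)):
        # Update every switch so all of them keep an accurate pressed state.
        if switch.update(reading) and selected is None:
            selected = index
    return selected
```

The selected index is what the glove would send to the display over BLE; readings that sit between the two thresholds leave the state unchanged.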

Created by: Cayden Pierce, Wazeer Deen Zulfikar, and Pattie Maes from MIT Media Lab

Powered by: XIAO nRF52840 Sense

MeMic is a wearable voice recorder that activates its microphone only when the wearer speaks, ensuring privacy in continuous audio logging. It detects speech through vibrations captured by the onboard IMU, processed locally on the XIAO nRF52840 Sense using a custom algorithm that filters motion signals and triggers recording when voice-like acceleration is detected. An RGB LED indicator lights up during active recording, providing transparency. Tested in pendant and smart glasses form factors, MeMic aims to make AI wearables more socially acceptable by mitigating always-on listening concerns. Check out our blog for more details.
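
MeMic’s algorithm is custom and isn’t detailed here, but the general idea of separating voice vibrations from body movement can be sketched: high-pass filter the accelerometer signal to remove gravity and slow motion, then compare the remaining energy in a short window against a threshold. The 1 kHz sample rate, 80 Hz cutoff, and RMS threshold below are hypothetical, and this generic approach is a stand-in for the project’s method, not a copy of it.

```python
import math


def high_pass(samples: list[float], sample_rate_hz: float, cutoff_hz: float) -> list[float]:
    """First-order high-pass filter that strips gravity and slow body motion."""
    if not samples:
        return []
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate_hz
    alpha = rc / (rc + dt)
    out = []
    prev_x, prev_y = samples[0], 0.0  # start from the first sample to avoid a step transient
    for x in samples:
        prev_y = alpha * (prev_y + x - prev_x)
        prev_x = x
        out.append(prev_y)
    return out


def voice_like(samples: list[float], sample_rate_hz: float = 1000.0,
               cutoff_hz: float = 80.0, rms_threshold: float = 0.02) -> bool:
    """Flag a window of accelerometer data (in g) whose high-frequency energy suggests speech."""
    filtered = high_pass(samples, sample_rate_hz, cutoff_hz)
    if not filtered:
        return False
    rms = math.sqrt(sum(v * v for v in filtered) / len(filtered))
    return rms > rms_threshold
```

In practice the threshold would likely need tuning per form factor, since a pendant and a pair of glasses pick up voice vibrations differently.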

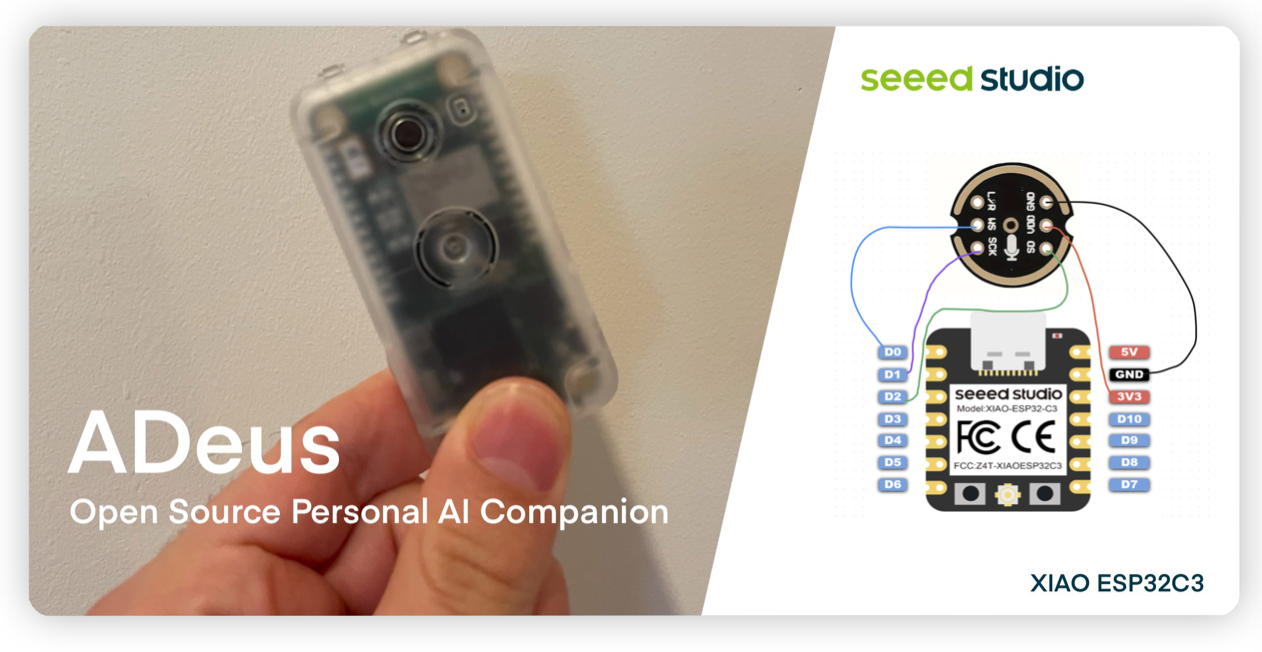

Created by: Adam Cohen Hillel & ADeus Community

Powered by: XIAO ESP32C3

ADeus is a privacy-focused AI assistant that captures the user’s audio via a wearable device and provides context-aware responses through a mobile/web app. Built around the XIAO ESP32C3 and an INMP441 mic, it stores transcripts securely using Supabase, an open-source Firebase alternative. It uses large language models for personalized interactions while keeping the user in full control of their data. Hardware setup involves soldering a mic and battery to the XIAO board, followed by configuration using ESP-IDF and Arduino, with support for iOS and Android. ADeus supports builds on Coral AI, Raspberry Pi Zero W, and the ESP32C3. You can find the complete project details on our blog.

Created by: Nik Shevchenko & Based Hardware

Powered by: XIAO ESP32S3 Sense

OpenGlass turns ordinary glasses into smart eyewear using affordable off-the-shelf components. It features a XIAO ESP32S3 Sense housed in a 3D-printed mount, alongside a compact Li-ion battery, enabling capabilities like life recording, people recognition, object identification, and text translation. These functions are powered by software that connects to the Groq and OpenAI APIs. The project is fully open source and welcomes community contributions. For more details, check out our blog here.

Creator: Nik Shevchenko and Based Hardware

Powered by: XIAO nRF52840 Sense

Friend is an AI-powered voice recorder that captures conversations hands-free and transcribes them in real time. It uses onboard TinyML on the XIAO nRF52840 Sense to detect speech and sends audio via Bluetooth to a mobile app, where Deepgram and OpenAI Whisper handle transcription, producing accurate, time-stamped summaries and task lists. Compact and energy-efficient, the device has a rechargeable battery and a switch, making it practical for daily wear. With an open-source design and step-by-step build guide, users can customize and contribute via GitHub. Check out our blog for more details.

Creator: Ralph Yamamoto

Powered by: Grove Vision AI Module V2, XIAO ESP32S3 Sense

This project uses two AI-enabled cameras to detect and photograph hummingbirds at a feeder. The Grove Vision AI V2 performs object detection, while the XIAO ESP32S3 Sense captures and stores images on an SD card, acting as a Wi-Fi-enabled web server for remote access. The system is housed in a 3D-printed mount attached to a pole and powered by a 2000mAh USB battery bank. The creator developed a custom hummingbird model using Edge Impulse and Kaggle data, then deployed it via SenseCraft AI. While the custom model works with SenseCraft AI’s preview, it has compatibility issues with Seeed’s SSCMA library, requiring further troubleshooting. You can find the complete project details here.
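
With two boards involved, much of the glue logic is deciding when a detection from the Grove Vision AI V2 should make the XIAO ESP32S3 Sense save a photo. This Python sketch adds a confidence threshold and a cooldown; the detection dictionary format, label, and numbers are assumptions, not the SSCMA library’s actual types.

```python
class CaptureTrigger:
    """Decide when a detection should produce a saved photo (illustrative)."""

    def __init__(self, label: str = "hummingbird", min_score: float = 70.0,
                 cooldown_s: float = 5.0):
        self.label = label            # hypothetical class name from the custom model
        self.min_score = min_score    # hypothetical confidence threshold (0-100 scale)
        self.cooldown_s = cooldown_s  # hypothetical minimum gap between photos
        self.last_capture_s = None

    def should_capture(self, detections: list[dict], now_s: float) -> bool:
        """Return True if a confident detection arrives outside the cooldown period."""
        seen = any(d["label"] == self.label and d["score"] >= self.min_score
                   for d in detections)
        if not seen:
            return False
        if self.last_capture_s is not None and now_s - self.last_capture_s < self.cooldown_s:
            return False  # still cooling down: avoid filling the SD card with near-duplicates
        self.last_capture_s = now_s
        return True
```

A cooldown also helps battery life on the 2000mAh pack, since each capture and SD write costs power.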

Creator: Billtheworld

Powered by: XIAO nRF52840 Sense

This demo blends voice recognition and animated displays using embedded ML and XIAO BLE Sense boards. Trained with Edge Impulse, the onboard PDM mic identifies keywords like “HuTao” or “shake,” activating a toy via relay or triggering animated GIFs on a 0.66″ OLED screen. Images are converted into byte arrays and rendered in real time. Voice data is collected as WAV files and processed into a lightweight classifier model deployed directly to XIAO. The project also uses 3D-printed toy components and offers Arduino code for speech-triggered display and servo control. You can find the complete project details on Hackster.io.
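
Converting GIF frames into byte arrays generally means reducing each frame to 1-bit pixels and packing eight pixels per byte. The sketch below assumes each frame has already been thresholded into rows of 0/1 values and packs it in the MSB-first, row-padded layout that Adafruit GFX’s `drawBitmap()` expects. The project may have used a different tool or layout (U8g2’s XBM format, for example, is LSB-first), and the array name is hypothetical.

```python
def pixels_to_bytes(rows: list[list[int]]) -> bytes:
    """Pack a 1-bit image (rows of 0/1) into MSB-first, row-padded bytes."""
    out = bytearray()
    for row in rows:
        for start in range(0, len(row), 8):
            byte = 0
            for bit, pixel in enumerate(row[start:start + 8]):
                if pixel:
                    byte |= 0x80 >> bit  # leftmost pixel goes in the highest bit
            out.append(byte)  # a partial final chunk is padded with zero bits
    return bytes(out)


def to_c_array(name: str, data: bytes, per_line: int = 12) -> str:
    """Format bytes as a C array that can be pasted into an Arduino sketch."""
    lines = []
    for i in range(0, len(data), per_line):
        lines.append("  " + ", ".join(f"0x{b:02X}" for b in data[i:i + per_line]))
    return (f"const unsigned char {name}[] PROGMEM = {{\n"
            + ",\n".join(lines) + "\n};")
```

Each frame’s array can then be pasted into the sketch and drawn in sequence to animate the GIF on the 0.66″ OLED.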

Creator: Md. Khairul Alam

Powered by: XIAO ESP32S3 Sense

Third Eye is a vision-to-speech aid for visually impaired users, combining edge AI and object detection to describe surroundings through headphones. Using a custom-trained Edge Impulse model, the XIAO ESP32S3 Sense detects everyday objects and sends results via UART to a Raspberry Pi, which converts them to spoken feedback using Festival TTS. The setup includes Arduino library deployment, camera pin remapping, and speech-driven alerts like “chair on the left” or “bed ahead”—all optimized for low-cost accessibility. You can find the complete project details here.
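
On the Raspberry Pi side, each detection arriving over UART has to become a short phrase for Festival. The sketch below assumes a hypothetical comma-separated line format (`label,x,y,w,h` in pixels, with a 96-pixel-wide frame) and splits the frame into thirds to choose between “on the left”, “ahead”, and “on the right”; the project’s real message format and rules may differ.

```python
from typing import Optional


def describe(line: str, frame_width: int = 96) -> Optional[str]:
    """Turn one hypothetical UART line 'label,x,y,w,h' into a short spoken phrase."""
    parts = line.strip().split(",")
    if len(parts) != 5:
        return None  # ignore malformed or partial lines from the serial port
    label = parts[0]
    try:
        x, y, w, h = (int(p) for p in parts[1:])
    except ValueError:
        return None
    center = x + w / 2  # horizontal center of the bounding box
    if center < frame_width / 3:
        where = "on the left"
    elif center > 2 * frame_width / 3:
        where = "on the right"
    else:
        where = "ahead"
    return f"{label} {where}"
```

The returned phrase could then be spoken with `subprocess.run(["festival", "--tts"], input=phrase, text=True)`, since Festival’s `--tts` mode reads text from standard input.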

Creator: Makestreme

Powered by: XIAO ESP32S3, Grove Vision AI Module V2

This quirky AI-powered scarecrow uses computer vision to detect pigeons and trigger a high-frequency noise to drive them away. The Grove Vision AI Module V2 is loaded with a wild bird detection model via SenseCraft, while the XIAO ESP32S3 generates randomized tones between 2 kHz and 20 kHz through a PAM8403 amplifier and 3W speaker. The system is embedded inside a hardboard scarecrow cutout, with the camera positioned behind one eye and the speaker inside the hat. The components are joined with carefully soldered connections, and the system responds only to bounding-box detections of nearby birds, which helps reduce false alarms. Results were mixed: pigeons initially scattered but gradually adapted. Click here for the complete project details.
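
The scarecrow’s logic combines two ideas from the description: use bounding-box size as a rough proxy for distance, and randomize the tone, presumably so birds are less likely to get used to one sound. The sketch below uses hypothetical box fields, frame size, area threshold, and burst length; only the 2 kHz to 20 kHz range comes from the project.

```python
import random

FRAME_AREA = 240 * 240  # assumption: detector input resolution of 240x240 pixels


def bird_is_near(box: dict, min_fraction: float = 0.05) -> bool:
    """Treat a bounding box covering enough of the frame as a nearby bird."""
    return box["w"] * box["h"] >= min_fraction * FRAME_AREA


def tone_burst(boxes: list[dict], rng: random.Random, count: int = 5,
               low_hz: int = 2_000, high_hz: int = 20_000) -> list[int]:
    """Return random tone frequencies to play, or an empty list if no bird is close."""
    if not any(bird_is_near(box) for box in boxes):
        return []
    return [rng.randint(low_hz, high_hz) for _ in range(count)]
```

On the XIAO, each frequency could be played with something like Arduino’s `tone(pin, frequency, duration)` into the PAM8403 amplifier.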

Creator: Seeed Studio

Powered by: XIAO nRF52840 Sense

This video showcases a TinyML speech recognition demo using the XIAO BLE nRF52840 Sense with Edge Impulse. The ultra-compact setup enables local voice command detection, featuring onboard sensors and BLE connectivity for real-time response. Optimized for low-power applications, it’s perfect for voice-controlled devices, smart wearables, and hands-free interfaces. The demo highlights just how accessible embedded AI has become, making speech recognition viable even on small, battery-powered microcontrollers. Watch the quick demo on YouTube.

Creator: Seeed Studio

Powered by: XIAO ESP32S3 Sense, XIAO Round Display

This super-compact voice assistant uses the onboard microphone of the XIAO ESP32-S3 Sense to capture speech, which is then transcribed via Google Cloud’s speech-to-text API and processed by ChatGPT for real-time conversational feedback. Answers and transcriptions are displayed on a circular screen, creating a tiny yet responsive voice interface. This fusion of embedded AI and cloud services demonstrates how ultra-portable devices can deliver intelligent interactions—perfect for smart home control, on-the-go assistants, or wearable tech. Watch the full demo on YouTube.

Creator: CiferTech

Powered by: XIAO nRF52840 Sense

This voice-controlled robot uses the onboard microphone of the XIAO nRF52840 Sense to interpret spoken commands via the micro_speech library. Once recognized, the commands are displayed on a vintage screen while the robot responds in real time—demonstrating a simple but effective application of offline voice recognition. The project highlights the potential of low-power embedded AI in educational robotics and interactive design. Watch the full demonstration on YouTube.
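
Offline keyword spotting typically doesn’t act on a single inference. The micro_speech example in TensorFlow Lite Micro, for instance, smooths per-frame scores over a time window before reporting a command. The Python sketch below is loosely modeled on that idea; the labels, window, threshold, and suppression period are illustrative values, not the robot’s configuration.

```python
from collections import deque
from typing import Optional


class CommandRecognizer:
    """Smooth per-frame keyword scores over a time window before reporting a command.

    Loosely modeled on the averaging idea in TensorFlow Lite Micro's micro_speech
    example; every parameter value here is illustrative.
    """

    def __init__(self, labels: list[str], window_ms: int = 1000, threshold: float = 0.8,
                 suppression_ms: int = 1500, min_frames: int = 3):
        self.labels = labels
        self.window_ms = window_ms            # how far back scores are averaged
        self.threshold = threshold            # minimum averaged score to accept
        self.suppression_ms = suppression_ms  # quiet period after a detection
        self.min_frames = min_frames          # need this many frames before deciding
        self.history = deque()                # (timestamp_ms, scores) pairs
        self.last_hit_ms: Optional[int] = None

    def process(self, scores: list[float], now_ms: int) -> Optional[str]:
        """Add one inference result and return a command label, or None."""
        self.history.append((now_ms, scores))
        # Drop results that have fallen out of the averaging window.
        while now_ms - self.history[0][0] > self.window_ms:
            self.history.popleft()
        if len(self.history) < self.min_frames:
            return None
        count = len(self.history)
        averages = [sum(frame[i] for _, frame in self.history) / count
                    for i in range(len(self.labels))]
        best = max(range(len(averages)), key=averages.__getitem__)
        label = self.labels[best]
        if averages[best] < self.threshold or label in ("silence", "unknown"):
            return None
        if self.last_hit_ms is not None and now_ms - self.last_hit_ms < self.suppression_ms:
            return None  # same utterance still echoing through the window
        self.last_hit_ms = now_ms
        return label
```

The suppression period stops one spoken word from being reported several times as it slides through the averaging window.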

Creator: IoT HUB

Powered by: XIAO ESP32C3

This compact AI terminal brings Google Gemini responses to a 0.96″ OLED screen using minimal hardware and clean formatting. Built with the XIAO ESP32C3, it connects to Wi-Fi and uses HTTPClient to send user queries—typed via Serial Monitor—to Gemini’s public API. Replies are parsed with ArduinoJson and displayed three lines at a time with button-controlled vertical scrolling. UI touches include animated splash screens, smooth word-wrap, and a loading animation. Users can expand the build with voice input modules, SD card history storage, or multitasking menus. You can find the complete project details on Hackster.io.
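
The terminal’s display path can be sketched in Python: pull the reply text out of the JSON returned by Gemini’s `generateContent` endpoint, word-wrap it to the screen width, and show a three-line window that the buttons scroll. The 21-character line width assumes a 128-pixel-wide OLED with a 6-pixel font, which is an assumption; on the ESP32C3 the same steps would use ArduinoJson and the display library instead.

```python
import json
import textwrap

LINE_CHARS = 21     # assumption: 128 px wide OLED, 6 px per character
VISIBLE_LINES = 3   # the project shows three lines at a time


def extract_text(response_body: str) -> str:
    """Pull the first candidate's text out of a Gemini generateContent response."""
    data = json.loads(response_body)
    parts = data["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)


def wrap_lines(text: str, width: int = LINE_CHARS) -> list[str]:
    """Word-wrap each paragraph, keeping blank lines between paragraphs."""
    lines = []
    for paragraph in text.splitlines():
        lines.extend(textwrap.wrap(paragraph, width) or [""])
    return lines


def visible_window(lines: list[str], offset: int) -> list[str]:
    """Return the lines shown for a scroll offset, clamped to the valid range."""
    max_offset = max(0, len(lines) - VISIBLE_LINES)
    offset = min(max(offset, 0), max_offset)
    return lines[offset:offset + VISIBLE_LINES]
```

Clamping the offset means the scroll buttons simply stop at the first and last page instead of showing a partially empty screen.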

Ready-to-Use AI Gadgets Powered by Seeed Studio XIAO

Looking to skip the wiring and dive straight into AI development? These plug-and-play gadgets powered by the XIAO Series are designed for fast deployment in edge AI, automation, and scientific applications.

A compact AI vision device featuring the Grove Vision AI V2, XIAO ESP32-C3, and OV5647 camera. Built on the Himax WiseEye2 platform with support for TensorFlow, PyTorch, and Arduino IDE. Easily deploy models and visualize inference using SenseCraft—perfect for quick AI prototyping.

An AI-powered edge device with camera, mic, and speaker, built on ESP32S3 and WiseEye2. With the LLM-ready SenseCraft suite, it sees, listens, and responds—ideal for smart automation and contextual AI interactions.

A modular AI microscope built with XIAO ESP32S3 Sense, offering features like autofocus, timelapse, and onboard inference. Co-created with openUC2, it brings smart imaging to classrooms, labs, and makerspaces.

Start Building AI-Powered Gadgets with XIAO

From TinyML to real-time computer vision, the Seeed Studio XIAO Series enables makers to bring advanced AI features into everyday devices—no bulky systems or always-on cloud required. With full support for Edge Impulse, TensorFlow Lite, PyTorch, and Arduino IDE, and a wide range of plug-and-play peripherals, XIAO makes it easier than ever to build fast, efficient, and creative AI prototypes.

Explore More

Have a project idea or question? Reach out to us by email; we’re always excited to hear from makers and developers like you!

Other Reference Designs for Seeed Studio XIAO

Last but not least, for makers and developers looking for project inspiration, we’ve curated several similar project collections organized by application. Feel free to check them out for ideas for your next build.

Notes at the end.

Hey community, we’re curating a monthly newsletter centered on the beloved Seeed Studio XIAO. If you want to stay up to date with:

🤖️ Cool Projects from the Community to get inspiration and tutorials

📰 Product Updates: firmware update, new product spoiler

📖 Wiki Updates: new wikis + wiki contribution

📣 News: events, contests, and other community stuff

Please click the image below👇 to subscribe now!