Seeed's AI Cameras and AI Computers

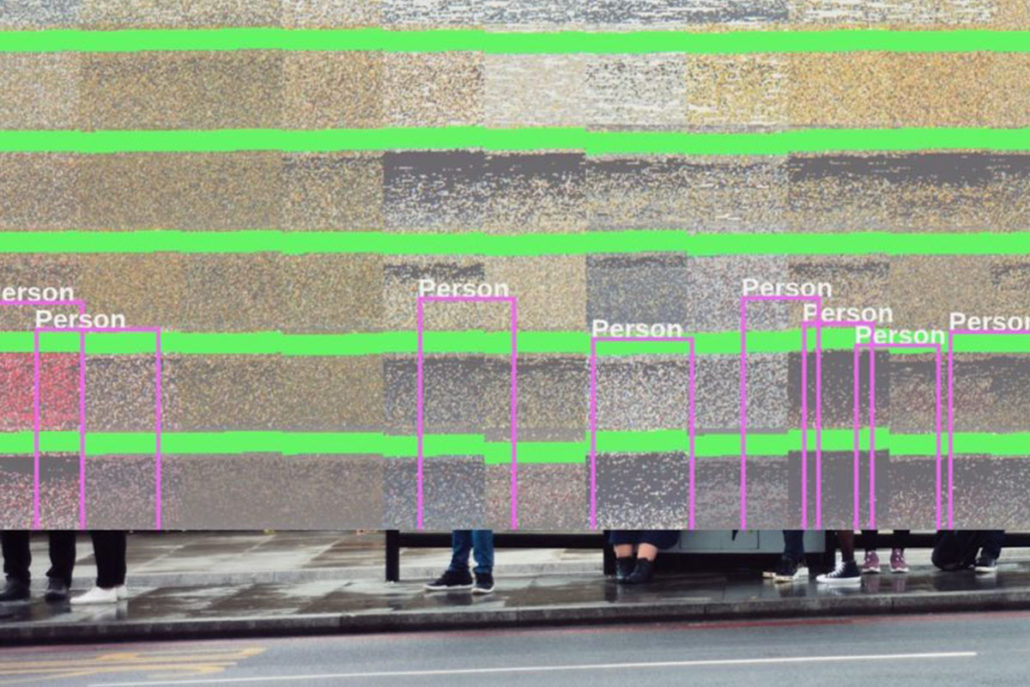

Right Tools for Every Stage of Your Vision AI Pipeline

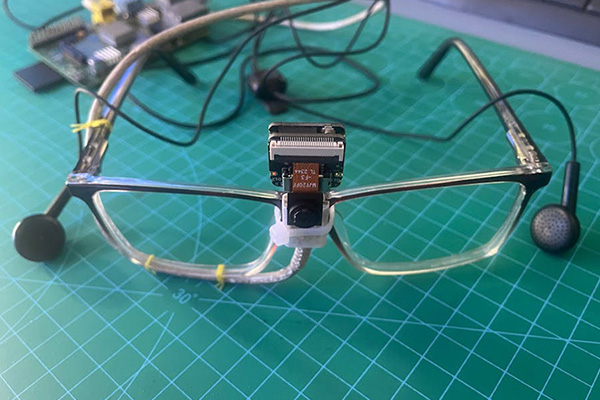

AI Cameras Reference Design

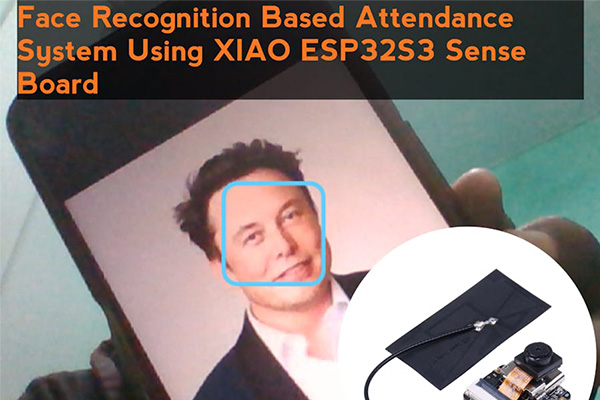

Ultra-Efficient at 0.05 – 1 TOPS

XIAO ESP32-S3 Sense, Tiny but Powerful to Run tinyML Models

Integrated Wireless Connectivity

A miniature vision AI camera and microphone dev board, built around the ESP32-S3, a 32-bit dual-core Xtensa processor with integrated Wi-Fi®/Bluetooth®.

Multi-Framework Dev Support

It supports TensorFlow and PyTorch and works with the Arduino IDE and MicroPython, making it a developer-friendly tool for building a wireless vision AI sensor that detects multiple scenes.

XIAO Vision AI Camera, Dual MCUs for Dedicated AI & Connectivity

Cutting-Edge Hardware Integration

A smart vision solution featuring a Himax WiseEye2-powered Grove Vision AI V2 and an OV5647 camera for vision AI processing, plus a separate XIAO ESP32-C3 MCU for Wi-Fi connectivity.

Developer-Friendly AI Ecosystem

It supports TensorFlow and PyTorch via the Arduino IDE, and offers no-code deployment and real-time inference visualization through SenseCraft AI, so users at any skill level can build AI vision applications.

reCamera Series: Open-Source AI Camera + Motion Control

The reCamera series combines a 1 TOPS camera (5 MP, YOLO11) with a 360° 2-axis gimbal. It is compact, modular, and ready for real-time tracking or custom AI in robotics, drones, or surveillance.

SenseCAP A1102 – for Indoor and Outdoor Sensing

Rugged Low-Power Edge AI Solution

An IP66-rated LoRaWAN® Vision AI Sensor, ideal for low-power, long-range TinyML Edge AI applications.

High-Res Vision with Secure LoRaWAN® Transmission

It captures at 480×480 resolution and 10 FPS, operates in the 863–928 MHz band, and runs on battery power. It sends only the inference results via LoRaWAN, which respects privacy and keeps transmissions secure.
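As a rough illustration of why result-only transmission suits a low-bandwidth link, the sketch below packs a list of detections into a few bytes. The `Detection` class, the function names, and the byte layout are all hypothetical and chosen for illustration; this is not the SenseCAP A1102's actual uplink format.

```python
import struct
from dataclasses import dataclass


@dataclass
class Detection:
    class_id: int      # model class index, 0-255 (hypothetical field)
    confidence: float  # score between 0.0 and 1.0 (hypothetical field)


def encode_detections(detections):
    """Pack detections as a 1-byte count followed by (class_id, percent) byte pairs."""
    payload = struct.pack("B", len(detections))
    for det in detections:
        # Store confidence as a whole percentage so it fits in one byte.
        payload += struct.pack("BB", det.class_id, round(det.confidence * 100))
    return payload


def decode_detections(payload):
    """Reverse encode_detections: read the count, then each two-byte pair."""
    count = payload[0]
    detections = []
    for i in range(count):
        class_id, percent = struct.unpack_from("BB", payload, 1 + 2 * i)
        detections.append(Detection(class_id, percent / 100))
    return detections


example = [Detection(0, 0.91), Detection(3, 0.5)]
payload = encode_detections(example)
decoded = decode_detections(payload)
```

With this assumed layout, two detections occupy 5 bytes, whereas a single uncompressed 480×480 frame at one byte per pixel would be 230,400 bytes.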

SenseCAP Watcher – The Physical AI Agent for Smarter Spaces

Power-Packed Edge AI Processing

A vision and voice AI agent built on the ESP32-S3, incorporating the Himax WiseEye2 HX6538 AI chip with Arm Cortex-M55 and Ethos-U55 cores, which excels at image and vector data processing.

LLM-Enabled Multimodal Perception System

Equipped with a camera, microphone, and speaker, SenseCAP Watcher can see, hear, and talk. Plus, with the LLM-enabled SenseCraft suite, SenseCAP Watcher understands your commands, perceives its surroundings, and triggers actions accordingly.

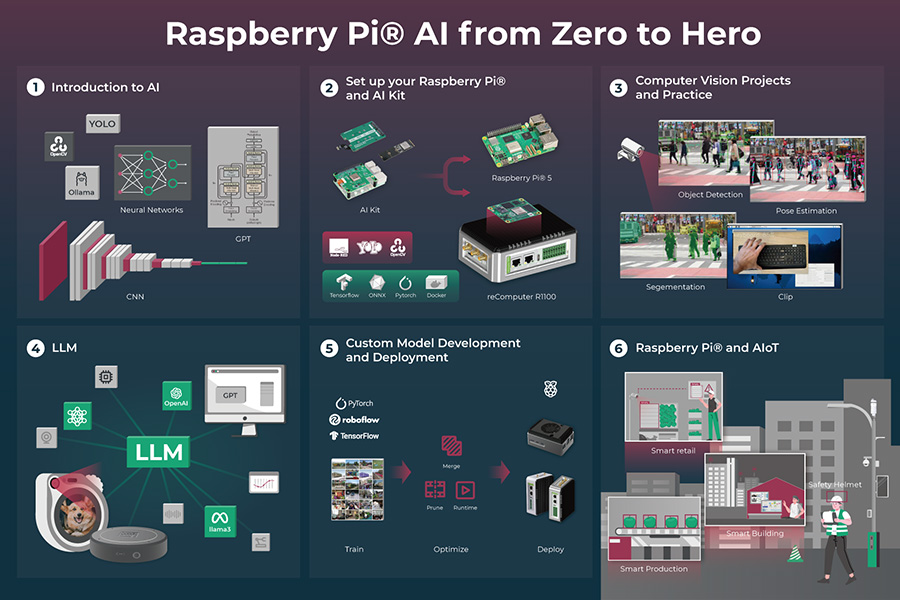

Basic Vision AI Computers

Mid-Tier Compute at 4 – 32 TOPS

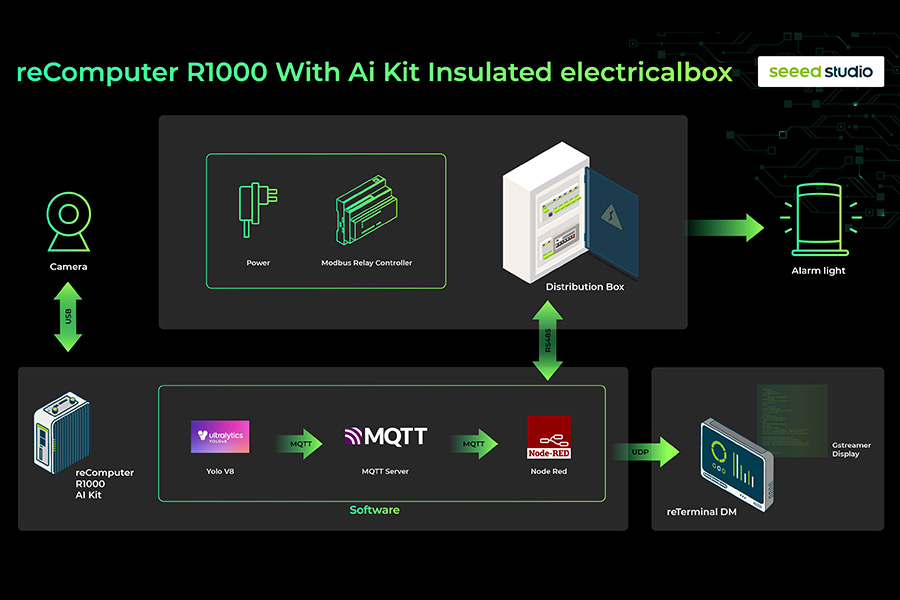

reComputer Industrial AI R

High-Performance Edge AI Acceleration

- Raspberry Pi CM5 (up to 16 GB) with a 26 TOPS Hailo-8 accelerator

- Offers a prebuilt model zoo and toolchain

Industrial-Grade Robustness for 24/7 Operation

With a wide 9–36 V input, a hardware watchdog, and robust cooling, it runs stably from -20°C to 65°C for 24/7 reliability, making it well suited to smart factories, surveillance, and AIoT deployments that need powerful edge AI.

reComputer AI R

High-Performance Edge AI Integration

- Raspberry Pi 5 (up to 16 GB) with a 26 TOPS Hailo-8 accelerator

- Offers a prebuilt model zoo and toolchain

Tailored for AI Vision & Edge Computing

- 4K 60 fps H.265 decoder supports 2 to 8 real-time 720p streams for multi-stream inference

- Versatile connectivity with 2× HDMI, 1× Gigabit Ethernet, and 2× USB 3.0

Accelerated Edge AI Computers

High-Performance at 34 – 375 TOPS

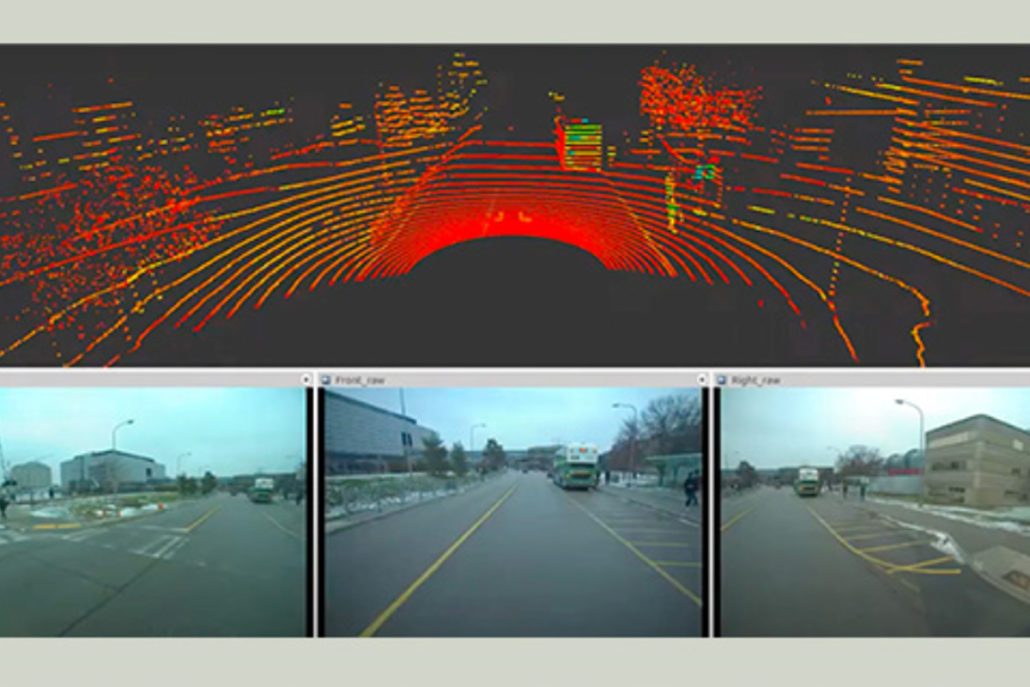

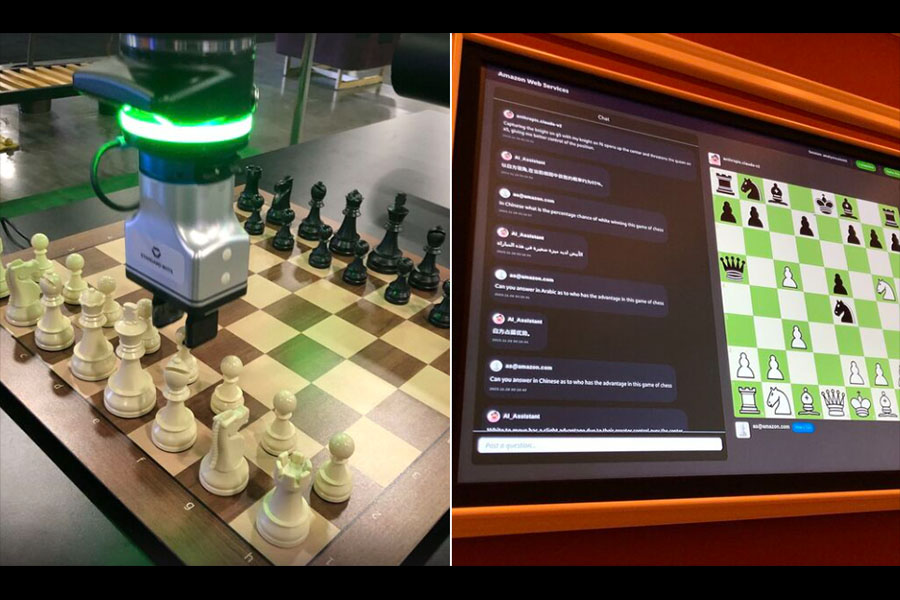

Ultra-High-Performance Multimodal Perception

- Designed for Jetson AGX Orin 32GB/64GB, up to 275 TOPS AI performance

- 8K60 and 3x4K60 video decoding supported

- 16-lane MIPI CSI-2 | up to 8 GMSL extension

The reServer Industrial J501 carrier board enables advanced vision AI for autonomous machines, and even supports humanoid robot development with enhanced environmental perception and motion control.

Supercharging Jetson Orin Nano/Orin NX Devices with up to 1.7x AI Performance

- Delivers up to 157 TOPS of AI performance, with hybrid cooling that efficiently handles 40 W of heat dissipation

- Dual Ethernet, 4× USB 3.2, CSI, CAN

- Robust operation from -20°C to 60°C in MAXN mode

The reComputer Jetson Super series handles video analytics in complex scenarios and large-scale data processing with ease, can deploy large generative AI models at the edge, and delivers the inference power needed for advanced robot development.

Local AI Inference Center for Multi-Stream Processing

- Compatible with Jetson Orin Nano/Orin NX, up to 100 TOPS AI performance

- 2× 2.5-inch drive bays to expand storage

- 1× RS232/RS422/RS485, 4× DI/DO, 1× CAN

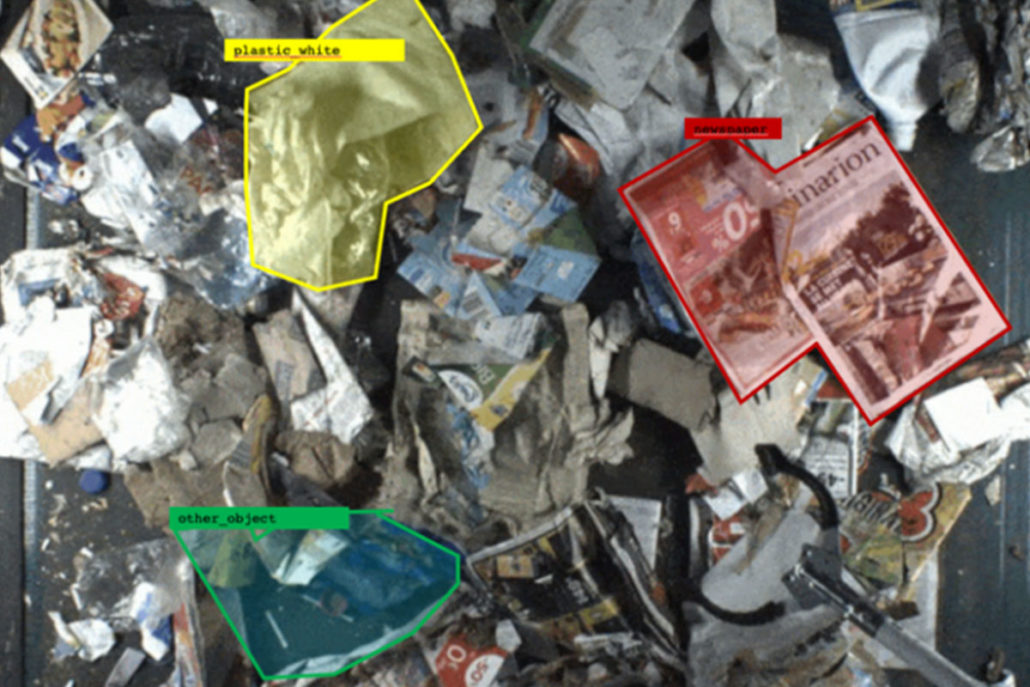

The reServer Industrial series can process multiple video streams in real time, applying high-performance filters to surface critical insights and trigger practical actions.

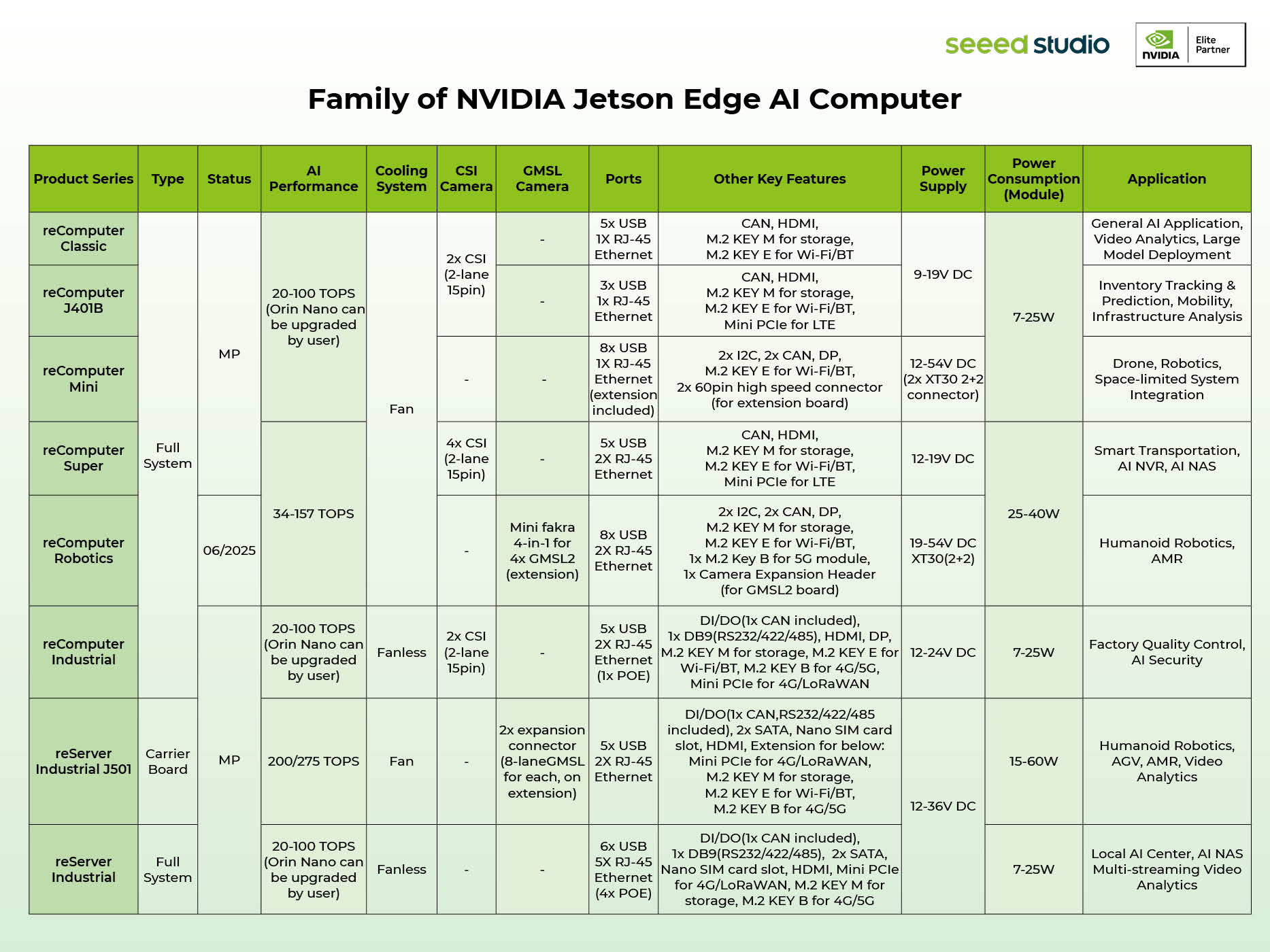

Explore our full range of Jetson GPU-accelerated computing solutions for efficient data processing and insights. They are designed to fit your requirements and are supported by a rich ecosystem of software stacks and toolkits, along with our partners.

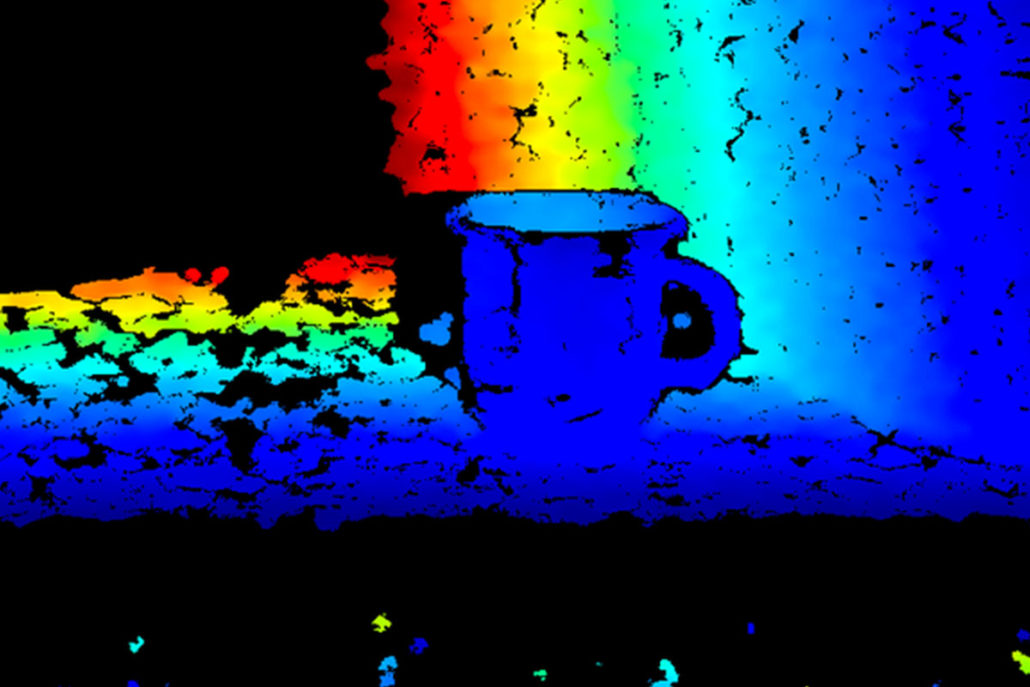

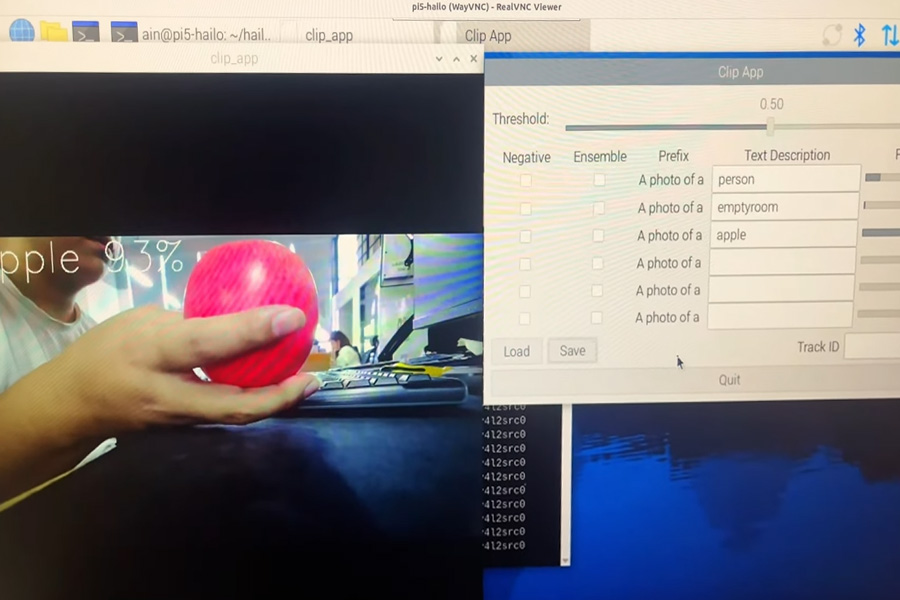

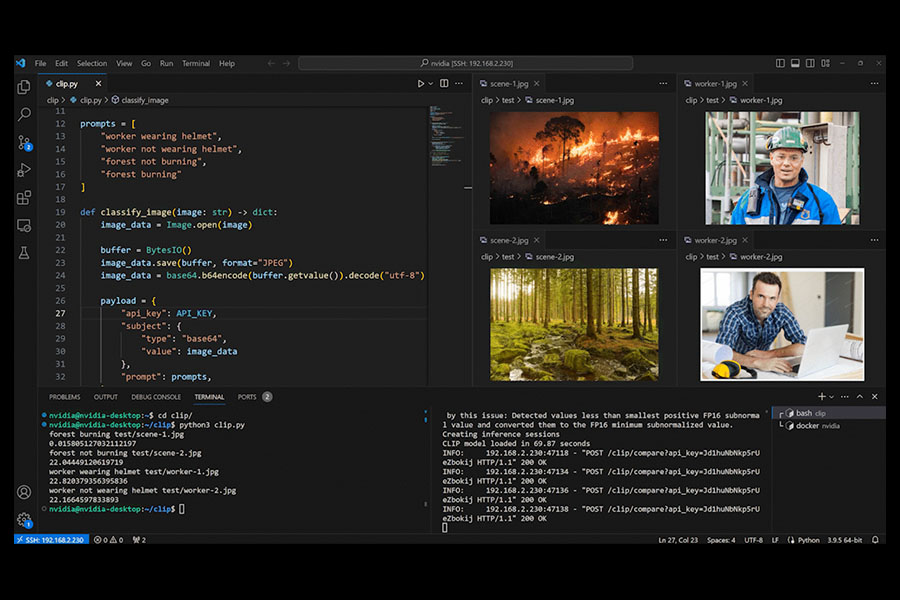

No-Code Vision AI with SenseCraft AI: Build Your Application in 1 Minute

Streamlined 1-Click Deployment

Access 300+ Vision AI apps from SenseCraft AI with instant deployment.

Seamless Seeed Hardware Compatibility

Works out of the box with your existing Seeed devices.

No-Code Data-to-Model Pipeline

Collect data and train vision AI models without coding expertise.

Open-Source Collaboration

Upload, share, and co-create models in our community-driven ecosystem.